Sunday, December 23, 2007

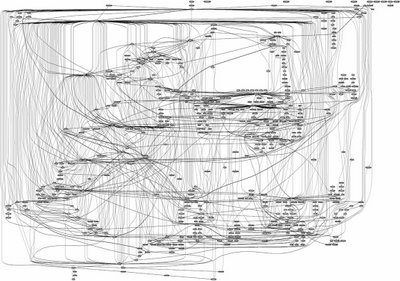

Arno's Software Development Bookshelf

Time for a picture of my software development bookshelf. It's basically divided into three sections: (A) Software development technology (by far the largest part), (B) Software development project management and (C) The history of the software industry. Please click on the image to zoom in.

Thursday, November 08, 2007

Switching Source Control Providers Under Visual Studio

Visual Studio 2005 lets you switch between different source control providers (e.g. Team Foundation Server and another SCC provider for Subversion or CVS) at runtime under Tools / Options / Source Control / Plug-In Selection. But if you still have to maintain some old .NET 1.1 code in Visual Studio 2003, you may be out of luck.

For example, I recently had to access an old SourceSafe repository, but since I had installed Team Foundation Server, Visual Studio 2003 would try to connect to the TFS server using a SourceSafe URI. Not a good idea. And there is no way to change the provider back to VSS temporarily from within Visual Studio 2003.

Luckily I found this nice little tool SCCSwitch which did the job.

Sunday, November 04, 2007

Poker Calculator

My friend and colleague Josef developed a poker hand odds calculator in Java, and I asked him to throw it into an applet so I could host it on this blog as well. Here we go:

Great stuff, Josef! Handling the input is very easy: just click the cards of choice for the input field that has the focus (yellow background), and press "Calculate" to compute the odds or to finish dealing out the remaining cards. You can also undo/redo any action.

The odds calculator uses a brute force approach. It looks quite fast, considering the fact it needs to process millions of combinations before the flop, and finishes in about a second on my PC. You can take a closer look at the code as well - it's open source.
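To give an idea of what "brute force" means here, the following is a minimal sketch (not Josef's code; the class and method names are made up for illustration). It assumes two players whose four hole cards are known and simply visits every possible five-card board from the remaining 48 cards. A real calculator would evaluate and compare the hands inside the predicate; this sketch only counts.

```java
import java.util.function.Predicate;

final class BoardEnumerator {
    /** Visits every 5-card combination of the deck exactly once and counts those accepted by the test. */
    static long countBoards(int[] deck, Predicate<int[]> test) {
        int n = deck.length;
        int[] board = new int[5];
        long hits = 0;
        for (int a = 0; a < n; a++) {
            board[0] = deck[a];
            for (int b = a + 1; b < n; b++) {
                board[1] = deck[b];
                for (int c = b + 1; c < n; c++) {
                    board[2] = deck[c];
                    for (int d = c + 1; d < n; d++) {
                        board[3] = deck[d];
                        for (int e = d + 1; e < n; e++) {
                            board[4] = deck[e];
                            if (test.test(board)) hits++;
                        }
                    }
                }
            }
        }
        return hits;
    }

    /** Card ids 0..51 in ascending order, minus the (distinct) cards already dealt. */
    static int[] remainingDeck(int... dealt) {
        boolean[] used = new boolean[52];
        for (int card : dealt) used[card] = true;
        int[] deck = new int[52 - dealt.length];
        int i = 0;
        for (int card = 0; card < 52; card++) {
            if (!used[card]) deck[i++] = card;
        }
        return deck;
    }

    public static void main(String[] args) {
        // Hole cards of both players; the ids are arbitrary placeholders.
        int[] deck = remainingDeck(0, 1, 2, 3);
        long total = countBoards(deck, board -> true);
        System.out.println("boards to evaluate: " + total);
    }
}
```

With four cards dealt there are C(48,5) = 1,712,304 boards, which is where the "millions of combinations" come from.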

My blog stylesheet won't resize the content area's width, which is why the applet is cut off on the right side; otherwise my navigation panel would have disappeared (I'll probably ask Josef to let his LayoutManager do some resizing instead, so the applet will fit within smaller screen real estate as well). You can see it here in full size.

The applet requires Java 5, which - if missing - should be installed automatically on MSIE, I hope also on Firefox (for Mozilla browsers I had to replace the <embed> tag by an <applet> tag in order for it to run at all inside a blogspot.com page).

And by the way Josef, here are the odds for our game last week, when you went all in. Sorry to mention that 84% sometimes still is not enough. ;-)

Update: Some proxies seem to filter away <object> tags altogether, so I replaced it with an <applet> tag for all browsers.

Wednesday, October 31, 2007

How To Trim A .NET Application's Memory Workingset

.NET developers who have monitored their application's memory consumption in Windows Task Manager, or slightly more sophisticated performance monitors like PerfMon, might have noticed that memory usage slowly rises and hardly ever drops. Once the whole operating system starts to run low on memory, the CLR finally seems to give back memory as well. And as some people have noted, memory usage also goes down once an application's main window is minimized.

First of all it's important to note that by default the Windows Task Manager only shows the amount of physical memory acquired. There is another column for displaying virtual memory usage, but it is hidden by default. So when physical memory usage drops, it's not necessarily the CLR returning memory; it may just as well be physical memory being swapped out to disk.

So memory consumption drops at some point in time - just probably too late. Those symptoms give us a first clue that we are not dealing with memory leaks here (of course memory leaks are less likely in managed environments than in unmanaged ones, but they are still possible - e.g. static variables holding whole trees of objects that could otherwise be reclaimed, or that event listener that should have been unregistered but wasn't). Also, whatever amount of native heap the CLR has allocated, the size of the managed heap within that native heap is a whole different story. The CLR might just have decided to keep native memory allocated even if it could be freed after a garbage collection pass.

And this does not look like a big deal at first glance - so what if the CLR keeps some more memory than necessary, as long as it's being returned once in a while? But the thing is, the CLR's decisions on when the right moment for freeing memory has arrived (or for that matter, the OS swapping unused memory pages to disk) might not always coincide with the users' expectations. And I have also seen Citrix installations with plenty of .NET WinForms applications running in parallel, soaking up a lot more resources than necessary and thereby straining the whole system.

Some customers tend to get nervous when they watch a simple client process holding 500MB or more of memory. "Your application is leaking memory" is the first thing they will scream. And nervous programmers will profile their code, unable to find a leak, and then start invoking GC.Collect() manually - which not only doesn't help, but is a bad idea generally speaking.

Under Java the maximum heap size is limited (the default value depends on the Java VM), and the limit can be overridden by passing the "-Xmx" command-line parameter to the runtime. Once the limit is reached, the garbage collector will be forced to run once more, and if that doesn't help either, an OutOfMemoryError is thrown. This might be bad news for the Java application, but at least it will not bring down the whole system.
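As a small illustration of that limit, here is a sketch that asks the running JVM for its configured maximum heap. The class name HeapLimit is made up for this example; Runtime.maxMemory(), totalMemory() and freeMemory() are standard library calls.

```java
final class HeapLimit {
    /** Maximum amount of memory the JVM will attempt to use for the heap, in MiB. */
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        // totalMemory() is what the heap currently occupies, freeMemory() the unused part of it.
        long usedMiB = (rt.totalMemory() - rt.freeMemory()) / (1024 * 1024);
        System.out.println("max heap: " + maxHeapMiB() + " MiB, currently used: " + usedMiB + " MiB");
    }
}
```

Starting it with, say, `java -Xmx64m HeapLimit.java` should report a maximum close to 64 MiB; the exact figure can differ slightly depending on the JVM and the garbage collector in use.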

I don't know of a counterpart to "-Xmx" in the .NET world. Process.MaxWorkingSet property allows for limiting the physical memory a process may occupy. I have read several postings recommending this approach to keep the whole .NET memory footprint low, but I am not so sure, plus setting Process.MaxWorkingSet requires admin privileges - something that application users will not (and should not) have.

A better choice is the Win32 API function SetProcessWorkingSetSize(), called with the special value -1 for both size parameters.

From MSDN:

BOOL WINAPI SetProcessWorkingSetSize(

__in HANDLE hProcess,

__in SIZE_T dwMinimumWorkingSetSize,

__in SIZE_T dwMaximumWorkingSetSize

);

If both dwMinimumWorkingSetSize and dwMaximumWorkingSetSize have the value (SIZE_T)-1, the function temporarily trims the working set of the specified process to zero. This essentially swaps the process out of physical RAM memory.

What SetProcessWorkingSetSize() does is remove the process's memory pages from its working set. What we have achieved at this point is that our application's physical memory usage is limited to the bare minimum. And all that unused memory will not be reloaded into physical memory as long as it is not being accessed. The same is true for .NET assemblies which have been loaded but are not currently used.

And the good news: this does not require the user to have admin rights. By the way, SetProcessWorkingSetSize is what's being invoked when an application window is minimized, which explains the effect described above.

I should note that there might be a performance penalty associated with that approach, as it might lead to a higher number of page faults following the invocation, in case other processes claim the physical memory in the meantime.

Obviously Windows' virtual memory implementation can not always swap out unused memory as aggressively. And it's my guess that what might hinder it furthermore is the constant relocation of objects within the native heap caused by garbage collection (which means a lot of different memory pages are being accessed over time, hence hardly ever paged to disk).

A Timer can be used for repeated invocations of SetProcessWorkingSetSize(), with a reasonable interval between two calls of maybe 15 or 30 minutes (this depends heavily on the kind of application and its workload). Another possibility is to check the physical memory in use from time to time, and to call SetProcessWorkingSetSize() once a certain amount has been reached. A word of warning though - I do not recommend invoking it too often either. Also, don't set explicit minimum and maximum working set sizes (let the CLR take care of that), just use the -1 parameter values in order to swap out memory; after all, that's what we are trying to achieve.

The complete code:

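// Requires: using System; using System.Diagnostics; using System.Runtime.InteropServices;

[DllImport("kernel32.dll", SetLastError = true)]

static extern bool SetProcessWorkingSetSize(IntPtr handle, IntPtr minSize, IntPtr maxSize);

// SIZE_T is pointer-sized, so (SIZE_T)-1 is passed as an IntPtr rather than an int

SetProcessWorkingSetSize(Process.GetCurrentProcess().Handle, new IntPtr(-1), new IntPtr(-1));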

Anyway, our Citrix customers are happy again, and no one has ever screamed "Memory leak!" since we implemented that workaround.

Thursday, October 25, 2007

Five Easy Ways To Fail

Joel Spolsky describes the most common reasons for software projects to go awry in his latest article "How Hard Could It Be? Five Easy Ways to Fail".

As kind of expected, a "mediocre team of developers" comes up as number one, and as usual Joel Spolsky describes it much more eloquently than I ever could:

#1: Start with a mediocre team of developers

Designing software is hard, and unfortunately, a lot of the people who call themselves programmers can't really do it. But even though a bad team of developers tends to be the No. 1 cause of software project failures, you'd never know it from reading official postmortems.

In all fields, from software to logistics to customer service, people are too nice to talk about their co-workers' lack of competence. You'll never hear anyone say "the team was just not smart enough or talented enough to pull this off." Why hurt their feelings?

The simple fact is that if the people on a given project team aren't very good at what they do, they're going to come into work every day and yet--behold!--the software won't get created. And don't worry too much about HR standing in your way of hiring a bunch of duds. In most cases, I assure you they will do nothing to prevent you from hiring untalented people.

I tend to question the four other reasons he mentions though (mainly estimating and scheduling issues). Don't get me wrong, he certainly has a point, but I would rank other problem areas higher: lack of management support, amateurish requirements analysis, or suffering from NIH syndrome among them.

Monday, October 15, 2007

Hints And Pitfalls In Database Development (Part 5): The Importance Of Choosing The Right Clustered Index

In database design, a clustered index defines the physical order in which data rows are stored on disk (Note: the most common data structure for storing rows both in memory and on disk is the B-tree, so the term "page" can also be interpreted as "B-tree leaf node" in the following text, although it's not necessarily a 1:1 match - but you get the point). In most cases the default clustered index is the primary key. The trouble starts when people don't give it any further thought and stick with that setting whether or not the primary key is a good choice for physical ordering...

File I/O happens at a page level, so reading a row implies that all other rows stored within the same physical disk page are read as well. Wouldn't it make sense to align those rows together which are most likely to be fetched en bloc too? This limits the number of page reads, and avoids having to switch disk tracks (which would be a costly operation).

So the secret is to choose an attribute for clustering which causes the least overhead for I/O. Those rows that are most likely going to be accessed together should reside within the same page, or at least in pages next to each other.

Usually an auto-increment primary key is a good choice for a clustered index. Rows that have been created consecutively will then be stored consecutively, which fits in case they are likely to be accessed along with each other as well. On the other hand if a row contains a date column, and data is mainly being selected based on these date values, this column might be the right option for clustering. And for child rows it's probably a good idea to choose the foreign key column referencing the parent row for the table's clustered index - a parent row's child rows can then be fetched in one pass.

I work on a project that applies unique identifiers for primary keys. This has several advantages, the client being able to create primary keys in advance among them. But unique identifier primary keys are a bad choice for a clustered index, as their values disperse more or less randomly, hence the physical order on disk will be just as random. We have experienced a many-fold performance speedup by choosing more suitable columns for clustered indexing.
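The effect can be made visible with a toy model. The sketch below is a simulation under stated assumptions, not a measurement of any real database: a page is assumed to hold 100 rows, and clustering on a random key is modelled as a random permutation of the physical slots. It counts how many distinct pages must be read to fetch 100 rows that were created one after another. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

final class ClusteringDemo {
    static final int ROWS_PER_PAGE = 100; // assumed page capacity, for illustration only

    /** Clustered on an auto-increment key: row i (in creation order) sits in physical slot i. */
    static int[] sequentialLayout(int rows) {
        int[] slotOfRow = new int[rows];
        for (int i = 0; i < rows; i++) slotOfRow[i] = i;
        return slotOfRow;
    }

    /** Clustered on a random key: ordering rows by random values yields a random permutation of slots. */
    static int[] randomKeyLayout(int rows, long seed) {
        List<Integer> slots = new ArrayList<>(rows);
        for (int i = 0; i < rows; i++) slots.add(i);
        Collections.shuffle(slots, new Random(seed));
        int[] slotOfRow = new int[rows];
        for (int i = 0; i < rows; i++) slotOfRow[i] = slots.get(i);
        return slotOfRow;
    }

    /** Number of distinct pages read to fetch count rows created consecutively, starting at firstRow. */
    static int pagesTouched(int[] slotOfRow, int firstRow, int count) {
        Set<Integer> pages = new HashSet<>();
        for (int row = firstRow; row < firstRow + count; row++) {
            pages.add(slotOfRow[row] / ROWS_PER_PAGE);
        }
        return pages.size();
    }

    public static void main(String[] args) {
        int rows = 10_000;
        System.out.println("sequential key: " + pagesTouched(sequentialLayout(rows), 5_000, 100) + " page(s)");
        System.out.println("random key:     " + pagesTouched(randomKeyLayout(rows, 42L), 5_000, 100) + " page(s)");
    }
}
```

In this model the sequential layout serves the whole range from a single page, while the random layout scatters the same rows over a large share of the table's pages.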

Wednesday, October 10, 2007

Friday, October 05, 2007

Fun With WinDbg

I did some debugging on an old legacy reporting system this week, using WinDbg. The reporting engine terminated prematurely after something like 1000 printouts.

After attaching WinDbg and letting the reporter run for half an hour, a first chance exception breakpoint hit because of this memory access violation:

(aa8.a14): Access violation - code c0000005 (first chance)

First chance exceptions are reported before any exception handling.

This exception may be expected and handled.

eax=00000000 ebx=665b0006 ecx=7c80ff98 edx=00000000 esi=00000000 edi=00000000

eip=665a384f esp=0012bdc4 ebp=00000005 iopl=0 nv up ei pl zr na pe nc

cs=001b ss=0023 ds=0023 es=0023 fs=003b gs=0000 efl=00010246

*** ERROR: Symbol file could not be found. Defaulted to export symbols for GEEI11.dll -

GEEI11!FUNPower+0x15f:

665a384f 668b7804 mov di,word ptr [eax+4] ds:0023:00000004=????

Trying to access address 0x00000004 ([EAX+4]), one of the reporting DLLs was obviously doing pointer arithmetic on a NULL pointer. The previous instruction was a call to GEEI11!WEP+0xb47c, which happened to be the fixup for GlobalLock:

665a3849 ff157c445b66 call dword ptr [GEEI11!WEP+0xb47c (665b447c)]

665a384f 668b7804 mov di,word ptr [eax+4] ds:0023:00000004=????

GlobalLock takes a global memory handle, locks it, and returns a pointer to the actual memory block, or NULL in case of an error. According to the Win32 API calling convention (stdcall), EAX is used for 32-bit return values.

The reporting engine code calling into GlobalLock was too optimistic and did not test for a NULL return value.

The next question was, why would GlobalLock return NULL? Most likely because of an invalid handle passed in. Where could the parameter be found? In the ESI register - it was the one pushed onto the stack before the call to GlobalLock, thus must be the one and only function parameter, and since ESI is callee-saved, GlobalLock had restored it in its epilog.

665a3848 56 push esi

665a3849 ff157c445b66 call dword ptr [GEEI11!WEP+0xb47c (665b447c)]

0:000> r

eax=00000000 ebx=665b0006 ecx=7c80ff98 edx=00000000 esi=00000000 edi=00000000

eip=665a384f esp=0012bdc4 ebp=00000005 iopl=0 nv up ei pl zr na pe nc

cs=001b ss=0023 ds=0023 es=0023 fs=003b gs=0000 efl=00010246

As expected, ESI was 0x00000000, and GetLastError confirmed this as well:

0:000> !gle

LastErrorValue: (Win32) 0x6 (6) - The handle is invalid.

LastStatusValue: (NTSTATUS) 0xc0000034 - Object Name not found.

Doing some further research, I found out that the global memory handle was NULL because a prior invocation of GlobalAlloc had been unsuccessful. Again, the caller had not checked for NULL at that point. And GlobalAlloc failed because the system had run out of global memory handles, as there is an upper limit of 65535. The reporting engine leaked those handles, neglecting to call GlobalFree() in time, and after a while (1000 reports) had run out of handles.

By the way, I could not figure out how to dump the global memory handle table in WinDbg. It seems to support all kinds of Windows handles, with the exception of global memory handles. Please drop me a line in case you know how to do that.

Now, there is no way to patch the reporting engine as it's an old third-party binary, so the solution we will most likely implement is to restart the engine process after a while, so that all handles are freed when the old process terminates.

Saturday, September 29, 2007

Disdain Mediocrity

Ben Rady writes:

I do know some traits that good software developers all seem to share. One of them is a healthy disdain for mediocrity.

Good developers cannot stand sloppiness (in software, anyway). Apathy, haste, and carelessness send shivers down their spines. They may disagree on the best way to do things, but they all agree that things should be done the best way. And they’re constantly looking and learning to find exactly what the best way is. They realize that seeking it is an ever-changing, lifelong quest.

I couldn't agree more...

Thursday, September 27, 2007

Three Years Of Blogging

Three years of blogging, 290 postings full of insight and wisdom (yeah, right), and ranked within the top 5 on hit count is the entry about the old Arcade joystick?!? I am flabbergasted... ;-)

Wednesday, September 26, 2007

The People Factor In Software Development

You may want to have a look at a presentation I assembled on "The People Factor in Software Development" (German only).

Friday, September 14, 2007

Finding Unused Classes Under .NET

While integrating some .NET libraries (which by the way came from an external development partner) in our main project, we noticed several classes that never were utilized. Getting suspicious, we decided to search for all unused classes. The question was: How to do that?

The .NET compiler is no big help on this, which is understandable - it can't emit warnings on apparently unused public classes within a class library, as they are most likely part of the library's public API and just happen not to be referenced inside the library itself. The same is true for IDE-integrated refactoring tools like ReSharper. ReSharper points out private/internal methods never called and private/internal types never referenced, but public classes are another story.

So my next bet was on static code analysis tools. They usually let you define the system boundaries, hence it should be possible to identify classes never referenced within those boundaries.

FxCop was one of the most widely used tools in the early days of .NET, but seems a little bit abandoned now, and did not have any matching analysis rule (or at least I didn't find any).

Total .NET Analyzer on the other hand looked very promising and supposedly includes this feature. In contrast to FxCop it parses the source code as well, and thus has the means for a more powerful breakdown. Unfortunately it ran out of memory when scanning our Visual Studio solution on my 2GB developer workstation.

Finally I ended up applying NDepend. NDepend has extensive code analysis capabilities, including the highly-anticipated search for unused classes. It also calculates all kinds of other metrics. I have only scratched the surface so far, but what I have seen is very convincing.

Sunday, September 09, 2007

Leading Software Development Teams: The Human Factor

I am currently preparing a presentation on the topic "Leading Software Development Teams: The Human Factor". Once I find the time I will translate it into English and post it on this blog. For the moment, here is my list of sources:

- DeMarco, Lister: "Peopleware"

- McBreen, P.: "Software craftsmanship - The new imperative"

- Rainwater, H.: "Herding cats: A primer for programmers who lead programmers"

- Spolsky, J.: "Joel on software"

- Spolsky, J.: "Smart and gets things done - Joel Spolsky's concise guide to finding the best technical talent"

- Weinberg, G.: "Becoming a technical leader - An organic problem-solving approach"

- Weinberg, G.: "The psychology of computer programming"

- Yourdon, E.: "Death March"

Thursday, September 06, 2007

Declare War On Your Enemies

While Joel Spolsky - as recently mentioned - prefers to bury bozos under a pile of bug reports, Ted Neward goes on the offensive and declares war on such and other enemies of project success:

Software is endless battle and conflict, and you cannot develop effectively unless you can identify the enemies of your project. Obstacles are subtle and evasive, sometimes appearing to be strengths and not distractions. You need clarity. Learn to smoke out your obstacles, to spot them by the signs and patterns that reveal hostility and opposition to your success. Then, once you have them in your sights, have your team declare war. As the opposite poles of a magnet create motion, your enemies - your opposites - can fill you with purpose and direction. As people and problems that stand in your way, who represent what you loathe, oppositions to react against, they are a source of energy. Do not be naive: with some problems, there can be no compromise, no middle ground.

Highly recommended reading material!

Friday, August 31, 2007

Word COM Interop: Problems With Application.Selection

Most code samples out there use the Application.Selection component for creating Word documents with COM Interop. From the Word object model documentation:

The Selection object represents the area that is currently selected. When you perform an operation in the Word user interface, such as bolding text, you select, or highlight, the text and then apply the formatting. The Selection object is always present in a document. If nothing is selected, then it represents the insertion point. In addition, it can also be multiple blocks of text that are not contiguous.

Often those code samples do not even bother to set the selection beforehand (e.g. by invoking Document.Range(begin, end).Select()), as they wrongly assume that Application.Selection just keeps on matching while they append content to the end of the document. Even with Range.Select() there are potential race conditions, as we will soon see...

The problem here becomes obvious when taking a closer look at the object model: the Selection object is attached to the Application object, not to the Document object. If the application's active document changes, its selection will refer to a different document than before.

Now this still seems to be safe at first sight as long as the caller operates on one document only, but not even this is the case: If the user opens a Word document from his desktop in the meantime, this is not going to start a new Word process. Instead, the new document will be attached to the running Word Interop process (hence the same Application). This Application has run window-less in the background so far, but now will pop up a Word window, where the user can watch in real-time how the Interop code suddenly manipulates the WRONG document, because from this moment on Application.Selection refers to the newly opened document.

How come this simple fact is not mentioned in the API documentation? I have found several newsgroup postings on that issue, so people are clearly struggling with this. So what might be a possible solution? Working with the Range API on document ranges (e.g. Document.Range(begin, end)) instead of application selections.
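
The ownership problem can be illustrated with a toy model in Java. To be clear, the classes below are made up for this illustration and are NOT the Word object model or any Interop API. They only mimic the relationship described above: the selection hangs off the application and follows whatever document is currently active, while a reference bound to a document keeps pointing at that document.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only: mimics the ownership relationships described in the text.
final class ToyDocument {
    final String name;
    final List<String> paragraphs = new ArrayList<>();

    ToyDocument(String name) {
        this.name = name;
    }
}

final class ToyApplication {
    private ToyDocument activeDocument;

    // Opening a document makes it the active one, as when the user
    // double-clicks a file while the automated instance is running.
    ToyDocument open(String name) {
        activeDocument = new ToyDocument(name);
        return activeDocument;
    }

    // Application-level "selection": always resolves to the active document.
    ToyDocument selectionTarget() {
        return activeDocument;
    }
}

final class SelectionPitfallDemo {
    public static void main(String[] args) {
        ToyApplication app = new ToyApplication();
        ToyDocument report = app.open("report");

        app.selectionTarget().paragraphs.add("Heading"); // lands in "report"
        ToyDocument letter = app.open("user-letter");    // user opens another document
        app.selectionTarget().paragraphs.add("Body");    // lands in "user-letter"
        report.paragraphs.add("Body");                   // document-bound reference stays correct

        System.out.println(report.name + ": " + report.paragraphs);
        System.out.println(letter.name + ": " + letter.paragraphs);
    }
}
```

The second write through the application-level selection ends up in the user's document, which is exactly the effect described above. Only the write through the document-bound reference reaches the intended target.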

Thursday, August 23, 2007

COM Interop Performance

COM Interop method calls are slow. When you think about it, that does not come as a surprise. Managed parameter types need to be marshalled to unmanaged parameter types and vice versa. This hurts performance especially with plenty of calls to methods which only accomplish a tiny piece of work, like setting or getting property values. 99 percent of the time might be lost on call overhead then.

There are more issues, e.g. incompatible threading models that may lead to message posting and thread context switching, memory fragmentation due to all the parameter marshalling effort, unnecessarily long-lived COM objects which are not disposed by the caller, but by the garbage collector much, much later. So when handled improperly, systems applying COM Interop not only tend to be slow, but also degrade over time regarding performance and system resources.

Most of our COM Interop code is related to MS Office integration, for example assembling Word documents (content and formatting). We had to do quite some tuning to reach an acceptable level of runtime behavior. Limiting the number of method calls and keeping references to COM objects (or rather their COM Interop wrappers) for reuse was one step towards improvement, while explicitly disposing of them as soon as they were no longer needed was another one. And never forget to invoke Application.Quit() when done, unless no one cares about zombie Word processes sucking up system resources.

Previous Posts:

- Hosting MS Office Inside .NET Windows Forms Applications

- COM Registration In .NET

- DSOFramer Now Compatible To Office 2007

Saturday, August 18, 2007

Sunday, August 12, 2007

Handling The Bozo Invasion

Invaluable advice from Joel Spolsky in "Getting Things Done When You're Only a Grunt":

Strategy 4: Neutralize The Bozos

Even the best teams can have a bozo or two. The frustrating part about having bad programmers on your team is when their bad code breaks your good code, or good programmers have to spend time cleaning up after the bad programmers.

As a grunt, your goal is damage-minimization, a.k.a. containment. At some point, one of these geniuses will spend two weeks writing a bit of code that is so unbelievably bad that it can never work. You're tempted to spend the fifteen minutes that it takes to rewrite the thing correctly from scratch. Resist the temptation. You've got a perfect opportunity to neutralize this moron for several months. Just keep reporting bugs against their code. They will have no choice but to keep slogging away at it for months until you can't find any more bugs. Those are months in which they can't do any damage anywhere else.

I agree for the scenario described above, that is, if you have to deal with one or two bozos among your coworkers. There might be other situations, though, for example bozos placed in strategic positions where they can heavily influence your work, or company growth based on hiring legions of morons. An invasion of bozos usually is a sign that an organization is doomed anyway. The path to downfall might be long and winding, and being doomed doesn't necessarily mean going out of business - just a slow and steady decline with failed projects paving the way. This is just inevitable. In those cases it's better to leave the sinking ship sooner rather than later.

Wednesday, August 08, 2007

DSOFramer Now Compatible To Office 2007

Just a short follow-up to my posting about hosting MS Office inside .NET WinForms applications: Microsoft's DSOFramer is now available in an Office 2007-compatible version.

Sunday, July 22, 2007

My Computer Museum (Update 2)

Main table (left to right)

(1) Sun SparcStation 5, NEC 21" VGA Monitor

(2) Commodore 128D, Commodore 1901 Color Monitor

(3) Commodore Amiga 500, Commodore 1085S Color Monitor

(4) Atari 1040ST, Atari SM124 Monochrome Monitor, Atari SC1224 Color Monitor

(5) Apple Macintosh LC, Apple Monochrome Monitor

(6) Commodore 64, Commodore 1541 Floppy Drive, Commodore 1081 Color Monitor

Back cross table (left to right)

(1) Commodore CBM8032

(2) Apple IIc, Apple II Green Composite Monitor

(3) Sinclair ZX81, B+W TV Set

Middle cross table (left to right)

(1) Atari 2600

(2) Magnavox Odyssey 2

(3) Atari PONG

(4) Magnavox Odyssey 1

Front cross table (left to right)

(1) Apple Macintosh Plus

(2) IBM PC 5150, IBM 5151 Green Composite Monitor

Wednesday, July 18, 2007

Parkinson's Law

In project management, individual tasks with end dates rarely finish early because the people doing the work expand the work to finish approximately at the end date. Coupled with deferment or avoidance of an action or task to a later time, individual tasks are nearly guaranteed to be late.

From: Parkinson's Law

Very true, especially for software projects.

Saturday, July 14, 2007

Vintage Computer / Video Console Purchases On Ebay

My latest vintage computer / video console purchases on Ebay include:

- IBM 5150 PC

- Apple Macintosh Plus

- Magnavox Odyssey 1 (!)

- Magnavox Odyssey 2

- Atari PONG (Sears edition)

- Atari 2600

There were other auctions on rare products, e.g. an Apple Lisa (went for USD 700) and a NeXTStation (sold for USD 200 - man, I should have bought it!).

I am running out of table space in my cellar, which is why I cannot provide any current images of the entire collection yet. In the meantime, here is a photo of a previous state.

Friday, July 13, 2007

My IBM 5150 Is Booting

The DOS 2.10 disks - which by the way I purchased in Australia - arrived today, so I tried to convince my IBM 5150 to boot. Unfortunately it did not recognize the disk at first, and kept jumping to the built-in BASIC interpreter. I had no possibility to check the disk on any other system. What to do next? I mean this thing doesn't even have a BIOS setup screen, it has... DIP switches on the motherboard! I should note that my IBM did not come with the two standard 5.25" floppy drives, but with one floppy drive and one 10MB hard drive, so I figured there might be some misconfiguration. Luckily I still found documentation on the 5150 DIP switch settings, and after flipping one switch I was able to boot.

Current date is Tue 1-01-1980

Enter new date:

Current time is 0:00:12.89

Enter new time:

The IBM Personal Computer DOS Version 2.10

(C)Copyright IBM Corp 1981, 1982, 1983

A> _

Next steps: Back up the only DOS 2.10 disk I have, and try to install DOS on the hard disk. Hard disks were supported from DOS 2.0 on - so this should work as well. Oh yeah, DOS 2.0 also introduced subdirectories...

Tuesday, July 10, 2007

Hosting .NET Winforms Applications In MSIE

I built a little .NET 2.0 Winforms frontend for my brute-force Sudoku Solver, and played around with hosting it inside MSIE. In case you are using MSIE and have .NET Framework 2.0 installed, you can give it a try here.

This is what it looks like:

Weird, isn't it? What is that, the Black and White Look & Feel? When running as a standalone application (download link), of course everything is just fine:

I have seen the same effect on other sites as well. Maybe that's Microsoft's way of visually marking MSIE-hosted .NET applications. Won't be too beneficial to the success of that approach I am afraid.

BTW, the Sudoku puzzle you see here is somewhat famous for its problem depth. The algorithm took 62ms on my old 2.4GHz Athlon to find the solution. Compared with other brute-force Sudoku solvers, that came off quite well.

Friday, June 29, 2007

More Performance Tuning

I was occupied with some performance tuning this week on our .NET client/server solution. Here are some of the conclusions I have drawn.

One of the bottlenecks turned out to be caused by some missing database indices. This again reminds me that I have hardly experienced a case when there were too many indices, but plenty of times when there were too few. Foreign keys AND where-criteria attributes are primary candidates for indices, and I'd rather have a really good reason when leaving out an index on those columns. I know some folks will disagree, and point out the performance penalty for maintaining indices, and that database size will grow. While I agree those implications exist, they are negligible in comparison to queries that run a hundred times faster with an index in the right place.

Also, the reasoning that relatively small tables don't need indices at all simply does not hold up in reality. While an index seek might not be much faster than a scan on a small number of rows, a missing index on a column that is being searched also implies that there are no statistics attached to this column (unless statistics are created manually - and guess how many developers will do so), hence the query optimizer might be wrong about the data distribution, which can lead to ill-performing query plans. Also, the optimizer might make different decisions because it takes into account that there is no index on certain columns.

There are several ways to find out about missing indices: Running the Tuning Advisor, checking execution plans for anomalies, and of course some excellent tool scripts from SqlServerCentral and from Microsoft.

A query construct that turns out to be problematic at times under SqlServer looks something like

where (table.attribute = @param or @param is null)

While these expressions help to keep SQL statements simple when applying optional parameters, they can confuse the query optimizer. Think about it - with @param holding a value, the optimizer has a restricting criterion at hand, but with @param being null it doesn't. When the optimizer re-uses an execution plan previously compiled for the same statement, trouble is around the corner. This is specifically true for stored procedures. Invoking stored procedures "with recompile" usually solves this issue. But this may happen within client-generated SQL code as well. Options then are forcing an explicit execution plan for the query (tedious, plus if you still have to support SqlServer 2000 you are out of luck), using join hints, or finally re-phrasing the query (in one case we moved some criteria from the where-clause to the join-clause, which led the optimizer back to the right path again - additional criteria might help as well).
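
For client-generated SQL, one further way of re-phrasing (not the one we applied, just a common alternative) is to leave an optional criterion out of the statement text altogether when no value is supplied, so the optimizer never gets to see the "or @param is null" branch. Here is a minimal Java sketch of that idea. The table customer, its columns and the class name are hypothetical, and the sketch only builds the statement text and the parameter list that would then be bound to a prepared statement.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: builds a statement that contains only the criteria actually supplied.
final class OptionalCriteriaQuery {
    final String sql;
    final List<Object> parameters;

    private OptionalCriteriaQuery(String sql, List<Object> parameters) {
        this.sql = sql;
        this.parameters = parameters;
    }

    // Table and column names are made up for this illustration.
    static OptionalCriteriaQuery forCustomers(String city, Integer status) {
        StringBuilder sql = new StringBuilder("select id, name from customer where 1 = 1");
        List<Object> parameters = new ArrayList<>();
        if (city != null) {
            sql.append(" and city = ?");
            parameters.add(city);
        }
        if (status != null) {
            sql.append(" and status = ?");
            parameters.add(status);
        }
        return new OptionalCriteriaQuery(sql.toString(), parameters);
    }
}
```

Values are still passed as bind parameters, never concatenated into the statement. Each combination of supplied criteria results in a different statement text, so a plan compiled for one combination is not re-used for another.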

By the way, I can only emphasize the importance of mass data tests and of database profiling. I don't want my customers to find out about my performance problems, and I want to know what is being shoveled back and forth between application and database at any time.

I was also investigating another performance problem that appeared when reloading a certain set of records with plenty of child data. Convinced of just having to profile for the slowest SQL, I was surprised that they all performed well. In reality, the time got lost within the UI, with too much refreshing going on (some over-eagerly implemented toolbar wrapping code which re-instantiated a complete Infragistics Toolbar Manager being the reason - external code, not ours).

I enjoy runtime tuning. A clear goal, followed through all obstacles, and at the end watching the same application running several times faster - those are the best moments for a developer.

Saturday, June 23, 2007

Pure Ignorance Or The Not-Invented-Here Syndrome?

I just finished listening to this .NET Rocks episode with guest Jeff Atwood of Coding Horror fame. I can really identify with a lot of what he says and what he advocates on his blog. One topic was brought up by Richard Campbell, I think, when he mentioned that nothing hurts him more than watching a 60K or 80K developer working on problems for months or years (successfully or not), when there are existing solutions available either for free as open source projects, or as commercial products priced at a few hundred bucks. Jeff wholeheartedly agreed.

They hit the nail on the head. I don't know whether it's pure ignorance or just the infamous Not-Invented-Here Syndrome, but this just seems to happen again and again. For my part, I blame decision makers approving budgets for such projects just as much as developers who try to solve problems that have been solved a hundred times better a hundred times before.

Here are some examples:

- Why not implement my own Database Diff tool? See RedGate SQL Compare and SQL Data Compare.

- Why not implement my own O/R mapper? See Hibernate.

- Why not implement my own XML parser? See Apache Xerces.

- Why not implement my own XML binding? See JAXB.

- Why not implement my own HTTP protocol? See package sun.net.www.http or Jakarta Commons HttpClient.

- Why not implement my own Java Enterprise Framework? See Spring Framework.

Friday, June 22, 2007

Bidding For An Atari Pong Videogame

Please don't tell anybody, but I am currently bidding for an Atari Pong videogame (the so-called Sears edition) on eBay.

Thursday, June 21, 2007

COM Registration In .NET

Just a short follow-up to my posting about Hosting MS Office inside .NET WinForms applications: In case there is the need to install ActiveX controls like DSOFramer or other COM components, but no Installer that could do so (e.g. because of XCopy deployment), this sample code shows how to accomplish on-the-fly COM registration in .NET.

Wednesday, June 20, 2007

EBay'ed An IBM 5150

After weeks of trying I finally managed to buy an IBM 5150 on EBay (yes, that's the first IBM PC). EUR 100 plus EUR 70 for shipping - but hey, it's worth the price.

I found original IBM DOS 2.0 disks as well, which complete the whole purchase (IBM DOS 1.1 or 1.0 would have been even better, but they are also rarer).

Friday, June 15, 2007

In Defense Of Data Transfer Objects

In the J2EE world, Data Transfer Objects have been branded as bad design or even as an anti-pattern by several Enterprise Java luminaries. Their bad image also dates back to the time when DTOs were a must at EJB container boundaries, as many developers ended up using Session EJBs at the middle tier which received/passed DTOs from/to the client, tediously mapping them to Entity Beans internally.

Then came Hibernate, which alongside its excellent O/R-mapping capabilities allowed for passing entities through all tiers, attached in case of an Open-Session-In-View scenario, detached in case of a loosely coupled service layer. So there is no need for DTOs and that cumbersome mapping approach anymore; this seems to be the widely accepted opinion.

And it's true, DTOs might be a bad idea in many, maybe even in most of the cases. E.g. it does not make a lot of sense to map Hibernate POJOs to DTOs when POJO and DTO classes would just look the same.

But what if internal and external domain models differ? One probably does not want to propagate certain internal attributes to the client, because they only matter inside the business layer. Or some attributes just must be sliced off for certain services, because they are of no interest in their context.

What if a system has been designed with a physical separation between web and middle tier (e.g. for security and scalability reasons)? An EJB container hosting stateless session beans is still a first-class citizen in this case. Other services might be published as webservices. It's problematic to transfer Hibernate-specific classes over RMI/IIOP or SOAP. Even if it's possible (as is the case under RMI/IIOP), this necessarily makes the client Hibernate-aware.

While it is true that Hibernate (as well as EJB3 and the Java Persistence API) is based on lightweight POJOs for entities, Hibernate has to inject its own collection classes (PersistentSet) and CGLib-generated entity subclasses. That's logical due to the nature of O/R-mapping, but having these classes transferred over service layer boundaries is not a great thing to happen. And there are more little pitfalls, for example state management on detached entities - how can an object be marked for deletion when it is detached from its Hibernate session?

Sorry, but I have to stand up and defend DTOs for these scenarios. Don't get me wrong, I appreciate Hibernate a lot and use it thoroughly within the middle tier, but I also don't see the problem of mapping Hibernate POJOs to DTOs on external service boundaries, especially when done in a non-invasive way. No mapping code has to pollute the business logic, no hardwiring is necessary, it can all be achieved by applying mapping frameworks like Dozer, using predefined mapping configurations. What goes over the wire at runtime is exactly the same as declared at compile time: a clear service contract, no obsolete entity attributes, no object trees without knowing where the boundaries are, and no surprising LazyInitializationExceptions on the client.
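
To make the point about the service contract a bit more concrete, here is a minimal sketch. All classes are hypothetical, and the hand-written mapper merely stands in for what a mapping framework like Dozer would do based on its configuration (this is not Dozer's API). The internal attribute never reaches the DTO, and the collection is copied into a plain ArrayList so that no persistence-specific collection class crosses the boundary.

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Internal entity as the middle tier might see it (hypothetical class).
final class CustomerEntity {
    long id;
    String name;
    String internalRiskRating;                     // matters inside the business layer only
    List<String> orderNumbers = new ArrayList<>(); // at runtime this could be a Hibernate collection
}

// External service contract: plain, serializable, free of persistence types.
final class CustomerDto implements Serializable {
    private static final long serialVersionUID = 1L;
    final long id;
    final String name;
    final ArrayList<String> orderNumbers;

    CustomerDto(long id, String name, List<String> orderNumbers) {
        this.id = id;
        this.name = name;
        // Copy into a plain ArrayList so no persistence-specific collection crosses the boundary.
        this.orderNumbers = new ArrayList<>(orderNumbers);
    }
}

// Hand-written stand-in for a configuration-driven mapping framework.
final class CustomerDtoMapper {
    static CustomerDto toDto(CustomerEntity entity) {
        return new CustomerDto(entity.id, entity.name, entity.orderNumbers);
    }
}
```

The mapper sits at the service boundary only, so the business logic keeps working with entities and does not need to know the DTO classes at all.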

Monday, June 04, 2007

Learning From Experience (Introduction)

Last weekend I dug out an old university project of mine - Visual Chat, a graphical Java chat system that I built for a class assignment exactly a decade ago. I was allowed to open-source Visual Chat back then, and I still receive questions from people today who are working on extending the application. Back in 1997 I was clearly still an apprentice, as this was one of my first Java and OOP projects. When I look at the source code today, I remember what I learned in the process of developing it, but I also see that I was still missing a lot of experience.

Learning not only in theory but also from real projects is particularly important in software development, and this is something that - in my opinion - is done far too little during education. Designing a system at a larger scale is a completely different task than solving isolated algorithmic problems (which is what you normally get to do for homework).

Class assignments that simulate real projects are great because one has the freedom to make mistakes and learn from them, much more than in professional life. Around 500,000 people have signed up at the Visual Chat test installation site so far, so I could learn a lot from monitoring what was going on at runtime. The worst thing that might have happened was that people would not like my chat program and would switch to another one (as I am sure many did). There was no danger of financial losses (or worse) for any user in case of an application mistake. I am glad I had this opportunity - it helped me to build better products once the chips were down at work, e.g. when developing banking or hospital information systems.

Receiving solid training is important in our profession, but only as long as it is accompanied by applying what one has learned in the classroom. Half-baked knowledge is dangerous, and it often takes two or three tries to get it right. Only a small fraction of people are able to do a perfect job from the beginning (and even a genius like Linus Torvalds openly admits that he also wrote some ugly code once upon a time).

So I decided to do two things. I am going to provide a little series on what I learned back then, and since then (looking at the code today), hoping that novices reading those articles can benefit by taking this path as a short-cut instead of walking the same path of experience on their own (which would be far more time-consuming). And I will do some refactoring on the old code, so everyone who decides to continue development work on Visual Chat will be able to take advantage of that as well.

Here is my topic list at the time of writing:

1. OOP Design - On loose coupling, the sense of interfaces and the concept of Singletons

2. Multithreading on the server - Gaining stability and performance through queuing

3. Multithreading on the client - The secrets of the AWT EventDispatch thread, EventQueues and repainting

4. How not to serialize a java.awt.Image

5. What are asynchronous Sockets (and why are they not supported in JDK1.1)?

6. Conclusions

Thursday, May 31, 2007

Bill Gates And Steve Jobs Interview

This recent interview is really a must-see - I don't think they have had this much fun since the Macintosh Dating Game in 1984 and the Macworld Expo Keynote in 1997.

Not to forget these previously unknown scenes.

BTW, I highly recommend the movie "Pirates Of Silicon Valley", which - as far as I can tell - provides quite an accurate picture of the PC revolution, plus it's very entertaining. Years ago I bought both the English and the German version of the movie, back then still on VHS. I must have watched it a dozen times since then.

Tuesday, May 15, 2007

Hints And Pitfalls In Database Development (Part 4): Do Not String-Concatenate SQL Parameter Values

Once again, this should be obvious. Unfortunately there are still plenty of articles and books out there with code examples that look something like this:

string sql = "select * from orders where orderdate = '" + myDate.ToShortDateString() + "'";

I don't know why this is the case. Most authors are well aware of the problems that might arise, and actually comment that this should not be done in real-life projects, but guess what - it will end up in real-life projects if examples like that are floating around.

So it's not really surprising that people keep writing this kind of statement. There are many reasons why this is just plain wrong.

Instead of concatenating parameter values, it's much better to use parameter placeholders, e.g.:

string sql = "select * from orders where orderdate = @myDate";

The placeholder syntax varies and depends on whether we are talking about the JDBC or ODBC / OLEDB / ADO.NET world (under OLEDB the syntax is defined by the underlying database-specific driver).

The database API then provides some functionality to pass the actual parameter values, something like:

SqlCommand cmd = new SqlCommand();

cmd.CommandText = sql;

cmd.Parameters.Add("@myDate", myDate);

This has several advantages:

- Avoiding SQL injection: No need to worry that any user will enter something like "'; drop database;" into an input field. The database vendor's driver is going to take care of that.

- Taking advantage of prepared statements: As the SQL code always stays the same, and only parameter values change on consecutive invocations, the statement can be precompiled and its execution plan reused, which usually results in better performance.

- Independence from database language settings: Expressions like myDate.ToShortDateString() produce language-specific formats. If your customer's database was set up with another language setting, or this code is being executed from a client with different language settings, you are out of luck.

- Improved readability: SQL code can be defined in one piece at one place, maybe inside XML, without the need to clutter it with string concatenation. Often those statements can just be copied and executed 1:1 within a database query tool for testing purposes. All that is necessary is to provide the parameters and set their values manually.
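The injection point from the list above can be tried out with a small self-contained sketch. It uses Python's standard-library sqlite3 module and an in-memory database purely as a stand-in (so the placeholder syntax is `:name` rather than `@name`); the table layout and the function names are assumptions made for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table orders (id integer primary key, customer text, orderdate text)"
)
conn.executemany(
    "insert into orders (customer, orderdate) values (?, ?)",
    [("alice", "2007-05-15"), ("bob", "2007-05-16")],
)


def orders_concatenated(customer):
    # Anti-pattern: the value becomes part of the SQL text itself.
    sql = "select id from orders where customer = '" + customer + "'"
    return conn.execute(sql).fetchall()


def orders_parameterized(customer):
    # The SQL text stays constant; the driver passes the value separately.
    sql = "select id from orders where customer = :customer"
    return conn.execute(sql, {"customer": customer}).fetchall()


malicious = "x' or '1'='1"
leaked = orders_concatenated(malicious)   # the where clause is bypassed
safe = orders_parameterized(malicious)    # treated as a plain (unknown) name
```

With the concatenated variant the crafted input turns the condition into one that is always true and every row comes back; with the placeholder variant the same input is just a customer name that matches nothing.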

Previous Posts:

- Missing Indices

- Let The Database Enforce Data Constraints

- Database Programming Requires More Than SQL Knowledge

Sunday, April 29, 2007

Hints And Pitfalls In Database Development (Part 3): Database Programming Requires More Than SQL Knowledge

First of all, "knowing SQL" is a rather broad term. SQL basics can be taught within a day, but gaining real in-depth SQL know-how, including all kinds of database-specific extensions, might take years of practice.

Then there are always plenty of ways to build a solution in SQL, and only a small subset of those are really good ones. It is important to be aware of the implications of certain SQL constructs - which kind of actions the database engine has to undertake in order to fulfill a given task.

Let me provide a little checklist - those are things every database programmer should know about in my opinion:

- ANSI SQL and vendor-specific additions (syntax, functions, procedural extensions, etc). What can be done in SQL, and when should it be done like that.

- Database basics (ACID, transactions, locking, indexing, stored procedures, triggers, and so on).

- Database design (normalization plus a dose of pragmatism, referential integrity, indices, table constraints, stuff like that).

- Internal functioning (for instance B-trees, transaction logs, temp databases, caching, statistics, execution plans, prepared statements, file structure and requirements for physical media, clustering, mirroring, and so on).

- How certain tasks impact I/O, memory and CPU usage.

- Query optimizer: what it can do, and what it can't do.

- Error handling, security (for example how to avoid SQL injection, ...).

- Database tools: profiling, index tuning, maintenance plans (e.g. backup and reindexing), server monitoring.

- Interpretation of execution plans.
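As a small illustration of the last few checklist items, the following sketch asks the database how it intends to execute a query, before and after an index is created. It uses Python's standard-library sqlite3 module and SQLite's `explain query plan` statement as a stand-in; other databases offer comparable tools with different syntax and output. The table, the index name and the helper plan() are assumptions for this example, and the exact wording of the plan text differs between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table orders (id integer primary key, customer text, orderdate text)"
)


def plan(sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("explain query plan " + sql, params).fetchall()
    return [row[-1] for row in rows]


query = "select * from orders where orderdate = ?"

# Without an index the engine has to scan the whole table.
before = plan(query, ("2007-04-29",))

conn.execute("create index idx_orders_orderdate on orders (orderdate)")

# With the index in place the engine can search via the index instead.
after = plan(query, ("2007-04-29",))
```

Comparing `before` and `after` shows the same SQL statement leading to very different work for the engine, which is exactly the kind of implication worth knowing about.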

Thursday, April 05, 2007

Hints And Pitfalls In Database Development (Part 2): Let The Database Enforce Data Constraints

Letting the database enforce data constraints might sound obvious, but as a matter of fact I had to listen to some people just a few months ago who advocated checking for referential integrity within business code instead of letting the database do so, because "foreign keys are slow" - I mean geez, what kind of nonsense is that?! I don't know, they probably forgot to define their primary keys as well, hence didn't have any index for fast lookup (*sarcasm*).

No other entity is better suited to safeguard data integrity than the database. It's closest to the data, it knows most about the data, and it's the single point every chunk of data has to pass through before being persisted.

And I am not only talking about primary keys and foreign key constraints. I put all kinds of checks on my databases, for example unique compound indices whenever I know that a certain combination of column values can only occur once. Or table constraints that enforce certain rules on the data which are being checked by the database on each insert and update. Triggers are another possibility, but care must be taken - they might be slower than table constraints.
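The kinds of checks mentioned above can be sketched as follows, using Python's standard-library sqlite3 module with an in-memory database as a stand-in for a real server. The schema, the constraint choices and the helper rejected() are assumptions made for this illustration; note that SQLite only enforces foreign keys after `pragma foreign_keys = on`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("pragma foreign_keys = on")  # SQLite needs this to enforce foreign keys
conn.executescript("""
create table customers (
    id   integer primary key,
    name text not null
);
create table orders (
    id          integer primary key,
    customer_id integer not null references customers(id),
    order_no    integer not null,
    quantity    integer not null check (quantity > 0)
);
-- a given order number may occur only once per customer
create unique index ux_orders_customer_no on orders (customer_id, order_no);
""")
conn.execute("insert into customers (id, name) values (1, 'alice')")
conn.execute("insert into orders (customer_id, order_no, quantity) values (1, 1, 5)")


def rejected(sql):
    """Return True if the database refuses the statement as an integrity violation."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        return True
    return False


unknown_customer = rejected(
    "insert into orders (customer_id, order_no, quantity) values (99, 1, 5)")
duplicate_order_no = rejected(
    "insert into orders (customer_id, order_no, quantity) values (1, 1, 3)")
zero_quantity = rejected(
    "insert into orders (customer_id, order_no, quantity) values (1, 2, 0)")
```

All three inserts are refused by the database itself, regardless of which application or tool issued them.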