This must be "The Daily WTF of the Year":

From "the global leader in providing supply chain execution and optimization solutions": One View to Rule Them All. See the underlying SQL-Code.

One of the most absurd things I have ever seen: Subselects, which contain subselects, which contain subselects, which contain aggregation functions. Also, the DISTINCT clause is certainly beneficial in regard to performance - especially on a view that might return every potential row, with close to 400 columns!

The unfortunate maintenance programmer explains:

"I was further astounded to learn that timeouts on certain critical operations were *routine*! [...] Paging through the trace, I found a stored procedure which took 505306 milliseconds - that's 8.5 minutes (!!!) - to execute, at 45% server utilisation."

Wednesday, December 29, 2004

Tuesday, December 28, 2004

Digital Fortress

I read Dan Brown's Digital Fortress during the Christmas holidays. The book has an interesting plot, and the story takes some surprising twists, so it was in no sense boring. The problem is that it is quite flawed from a technical point of view - and this really spoils the reading experience for the average techie with some basic knowledge of cryptography.

I don't even want to start ranting about the unrealistic showdown, when a software worm takes down the NSA's security tiers one by one, and the agency's director decides to take the risk instead of simply shutting down the system. Or the fact that the leading character, IQ-170 wonder-mathematician Susan Fletcher, does not even grasp the most obvious connections. Or that their massively parallel miracle system goes up in flames due to overheating (No ventilation or cooling? No emergency shutdown? Using NMOS, or what? And no backups and no redundant datacenter?). Let's just examine one of the book's main areas of interest, namely cryptographic issues:

The so-called Digital Fortress algorithm (which is able to resist brute-force code-breaking attempts) is published on the internet, encrypted BY ITSELF (the main storyline is about the chase for the cipher key). The highest bidder will receive the key, hence will own the algorithm. Now wait a second - in order to decrypt it, he needs to know the algorithm already, right? Hmmm, makes you wonder how he should get his hands on the algorithm, as it is only available in its encrypted form. Or put it the other way: let's suppose this is all possible, then the bidder HAS that algorithm already in cleartext at that point in time - before he is actually going to decrypt it. So there is no reason for purchasing the key in the first place.

Another gemstone: "To TRANSLTR [the decryption machine] all codes looked identical, regardless of which algorithm wrote them". I wonder how one decrypts something without any knowledge of the underlying algorithm. Anyway, no modern encryption standard depends on having to keep its algorithm secret. Keeping the key secret is the only thing that counts.

"Public Key Encryption" is being described as "software that scrambles personal e-mail message in such a way that they were totally unreadable. [...] The only way to unscramble the message was to enter the sender's pass-key". OK, this explanation is confusing, but the author also misses the main point here. Public/private key pairs (that is, asymmetric encryption) solve the problem of key distribution (e.g. for exchanging a short-lived, temporary symmetric key - symmetric encryption outperforms asymmetric encryption by far, hence is much more suitable for higher data volumes and/or server applications), and enable sender authentication and message integrity. The sender encrypts with the receiver's public key, so that the matching private key (used for decryption) remains with the receiver exclusively. Conversely, the sender signs the message with his own private key, so the receiver can verify the signature and the message's integrity using the sender's public key.
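To make the two directions concrete, here is a minimal sketch using textbook RSA with tiny primes. It is a toy for illustration only: the primes, the exponent and the helper names `make_keypair` and `apply_key` are assumptions of mine, and real systems use vetted libraries, padding, and sign a hash rather than the raw message.

```python
from math import gcd


def make_keypair(p, q, e=17):
    """Build a toy RSA key pair from two small primes (insecure, illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "public exponent must be coprime to phi"
    d = pow(e, -1, phi)  # modular inverse, needs Python 3.8+
    return (n, e), (n, d)  # (public key, private key)


def apply_key(key, value):
    """Raise value to the key's exponent modulo n (used for all four operations)."""
    n, exponent = key
    return pow(value, exponent, n)


# Hypothetical key pairs for the two parties.
receiver_public, receiver_private = make_keypair(61, 53)
sender_public, sender_private = make_keypair(89, 97)

message = 42  # must be smaller than both moduli in this toy setup

# Sender side: encrypt with the RECEIVER's public key, sign with the SENDER's private key.
ciphertext = apply_key(receiver_public, message)
signature = apply_key(sender_private, message)

# Receiver side: decrypt with the receiver's private key, verify with the sender's public key.
decrypted = apply_key(receiver_private, ciphertext)
signature_ok = apply_key(sender_public, signature) == decrypted
```

Note that nobody ever has to "enter the sender's pass-key" to read the message: the only secret needed for decryption is the receiver's own private key.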

The author also mixes up key length and key space. He states: "... the computer's processors auditioned thirty million keys per second - one hundred billion per hour. If TRANSLTR was still counting [for 15 hours], that meant the key had to be enormous - over ten billion digits long." OK, that is 1.5 * 10^12 keys in 15 hours. Even if we are talking about binary digits here, a 41-bit key is enough to cover that range (2^41 is roughly 2.2 * 10^12). Not "over ten billion digits". This also contradicts the statement that TRANSLTR breaks 64-bit keys in about 10 minutes.
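The arithmetic is easy to check. The following sketch only uses the figures quoted from the book; the variable names are mine.

```python
# Figures as quoted: "one hundred billion per hour", running for 15 hours.
keys_per_hour = 100_000_000_000
hours = 15
keys_tried = keys_per_hour * hours  # 1.5 * 10^12 keys

# Key length needed so that the key space is at least as large as keys_tried.
bits_needed = (keys_tried - 1).bit_length()   # 41 binary digits
decimal_digits = len(str(keys_tried))         # 13 decimal digits

# Rate required to exhaust a 64-bit key space in 10 minutes (600 seconds).
rate_for_64_bit = 2**64 // 600                # roughly 3 * 10^16 keys per second
quoted_rate = 30_000_000                      # "thirty million keys per second"
```

Either way the key is a few dozen digits long at most, and the rate needed for the 64-bit claim is about a billion times higher than the quoted thirty million keys per second.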

In the book's foreword, the author says thanks to "the two faceless ex-NSA cryptographers who made invaluable contributions via anonymous remailers". Makes me wish they had proofread it once.

Saturday, December 25, 2004

EPIC 2014

What will have happened to the news in the year 2014? An interesting look ahead into the future - entertaining, although not very likely if you ask me.

Friday, December 17, 2004

Microsoft

Yesterday I attended a Microsoft marketing presentation. Yes, it was a cursory demo; that is inevitable when you get a first glance at Whidbey, Yukon, Avalon, Indigo and Longhorn, all within one afternoon.

What impressed me most (probably because I had not seen it before) was the forthcoming Visual Studio Team System. It is scheduled about six months after Visual Studio 2005 (Whidbey), which means it will ship in about a year. I think Team System will substantially change the way we develop on the Microsoft platform. Yes, we have been working on component modeling, automated builds, static and dynamic code profiling, unit and load testing, and so on before, but this always used to require N different tools from N different vendors. This is the first time all of this gets integrated into one big suite. Visual Studio Team System licenses are costly, but after all, it is mainly aimed at enterprise application projects.

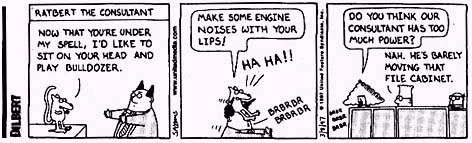

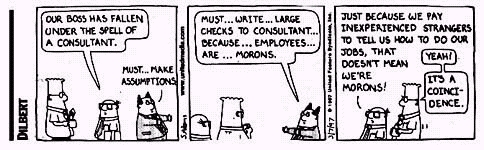

Talking about Microsoft marketing, admittedly, Microsoft has a notorious history of creating "Fear, Uncertainty and Doubt". Promising impossible shipping dates for products and product features was one common strategy, next to ruthless business tactics. IBM experienced this with OS/2, as did 3COM with LAN Manager. Fifteen years have gone by since those days, and Microsoft is still aggressive, but it has also grown up. They have stayed at the top of software development companies, many say thanks to the fact that their former CEO was the only real nerd, while his competitors were led by MBAs who just didn't understand the software business.

Microsoft has also pioneered the concept of "good enough" software - which is just another term for finding the optimal economic balance between code purity and practicality (there is nothing bad about "good enough" software, actually it turned out to be the most successful approach for many shrink-wrapped products). In my experience, Microsoft has improved a lot on quality issues (see also their Trustworthy Computing Campaign). Lately I met with several Microsoft consultants, and all of them were top-notch engineers. When you think about it, this does not come as a surprise: Microsoft always hires the smartest of the smartest. Now they are in the position to do so (50,000 job applications a month - this means they can invite the top 5% for interviews, and employ the top 1%). But even in the old days, Bill Gates always engaged Triple-A developers. B-people are scared of A-people, so when put in charge, they tend to hire more B- or even C-people, dragging down your workforce's qualification level.

Microsoft bashing is a common hobby among many. Some criticize their business behaviour (even the Department of Justice does from time to time) - one may agree or disagree with that. But I refer to unreflected criticism, the kind that has the nature of religious wars (e.g. "Windows sucks, Linux rules"). It's funny to note that this often comes from people who are the least qualified to judge. Very rarely are they Triple-A people; they just don't play in the same league as those who develop the next version of Windows or the next Microsoft development platform up in Redmond (well OK, there is always an exception to the rule: programming gods like Linus Torvalds, Bill Joy or James Gosling are allowed to complain about Microsoft ;-)). Some hacked their time around at college on university systems, hardly ever worked on a major real-life software project, but instead preferred to build up their little fiefdoms on archaic systems that no one else really cared about.

Now, badmouthing Microsoft may make the averagely talented developer look cool, at least in front of those who don't know any better. Here is my advice for all unsolicited Microsoft bashers: Please, grow up! Welcome to the real world - in a professional corporate environment no one wants to hear your religious rants. Microsoft is there, and it is going to stay, so you'd better get used to it.

An interesting fact is that our Microsoft consultants knew very well about the strengths and weaknesses of their products. E.g. they never had a problem expressing their respect for cool J2EE features or the like. And they pointed out in which areas Microsoft still has to improve.

I have been working on Unix, Java and Microsoft platforms, and I appreciate all of them. I have the highest respect for their creators. But I am tired of B- and C-people who seriously think they are in any position to fire unsolicited flames at the efforts of really talented folks at the world's most successful software company, just for the sake of boosting their own crippled egos.

Sunday, December 12, 2004

Plan Your Architecture Before Choosing Your Technology

It should be obvious to every software project manager, but unfortunately it doesn't always seem to be: System architecture design PRECEDES technology decision-making. Premature, ill-fated technology decisions can bring whole projects down. Or, as Hank Rainwater states in his book "Herding Cats: A Primer for Programmers Who Lead Programmers":

"The magic bullet or golden hammer (whatever you want to call it) technology doesn't solve business problems, people do. Sure, you employ technology to implement a solution, but you are wasting time if you think buying the latest addon to your development environment is going to increase productivity."

[...]

"I encourage you to determine your architectural needs and plan a system before you choose a technology of implementation. You'll just have to do it all over again if the new whiz-bang tool doesn't pan out. You've heard it said many times: If you don't have time to do the job right, when will you have time to do it over again?"

Wednesday, December 08, 2004

Apache AXIS, Proxies And SSL

Some time ago I ported a subsystem from a proprietary XML-over-HTTP request/response format to webservices. The webservice client was done in Java. Now, as we were applying secure sockets (including our own local keystore for holding a client certificate), there used to be an issue with the Java Secure Socket Extension's default behaviour when tunnelling HTTPS through a proxy. JSSE's default SSLSocketFactory somehow expects a "HTTP/1.0 200 OK" response (this is hardwired!), but many proxies reply with "HTTP/1.1 200 OK" or "HTTP/1.1 200 Connection established". More on this issue on JavaWorld.

Now, we simply implemented our own SSLTunnelSocketFactory which would not be as restrictive. One can either attach it globally by invoking HttpsURLConnection.setDefaultSSLSocketFactory(), or on a per-connection basis: HttpsURLConnection.setSSLSocketFactory().
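The less restrictive check boils down to accepting any HTTP version and any 2xx status in the proxy's reply to the CONNECT request. Our factory was written in Java; the following is only a language-neutral sketch of that check in Python, and the names `STATUS_LINE` and `tunnel_established` are made up for this example.

```python
import re

# Status line of the proxy's reply: "HTTP/<major>.<minor> <3-digit code> <reason>".
STATUS_LINE = re.compile(r"^HTTP/\d\.\d\s+(\d{3})\b")


def tunnel_established(status_line: str) -> bool:
    """Return True if the proxy answered the CONNECT request with any 2xx status."""
    match = STATUS_LINE.match(status_line.strip())
    return match is not None and match.group(1).startswith("2")


replies = [
    "HTTP/1.0 200 OK",
    "HTTP/1.1 200 OK",
    "HTTP/1.1 200 Connection established",
    "HTTP/1.1 407 Proxy Authentication Required",
]
results = [tunnel_established(reply) for reply in replies]
```

A comparison against one literal string, by contrast, rejects every reply except the first.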

This worked just fine for HttpsURLConnections. But Apache Axis (1.1) is different. It comes with its own JSSESocketFactory implementation, which of course once again won't accept the proxy's HTTP/1.1 response. And it ignores the fact that we already installed our own SSLTunnelSocketFactory. I was about to patch Axis and roll out our own build, when I came across a forum post which mentioned that Axis would allow other SocketFactories once they implement a public SocketFactory(Hashtable attributes) constructor. Actually, this constructor will never be invoked. It just needs to be there. And it works like a charm now.

Do you remember the last time you saw one of those "Three Mouseclicks To Create Your Webservice Client On (VS.NET | IBM WSAD)" presentations? Real life just ain't that easy.

Tuesday, December 07, 2004

On Software Development Methodologies

Tamir Nitzan on Joel on Software:

Lastly there's MSF. The author's [annotation: Joel Spolsky's] complaint about methodologies is that they essentially transform people into compliance monkeys. "our system isn't working" -- "but we signed all the phase exits!". Intuitively, there is SOME truth in that. Any methodology that aims to promote consistency essentially has to cater to a lowest common denominator. The concept of a "repeatable process" implies that while all people are not the same, they can all produce the same way, and should all be monitored similarly.

For instance, in software development, we like to have people unit-test their code. However, a good, experienced developer is about 100 times less likely to write bugs that will be uncovered during unit tests than a beginner. It is therefore practically useless for the former to write these... but most methodologies would enforce that he has to, or else you don't pass some phase. At that point, he's spending say 30% of his time on something essentially useless, which demotivates him. Since he isn't motivated to develop aggressively, he'll start giving large estimates, then not doing much, and perform his 9-5 duties to the letter. Project in crisis? Well, I did my unit tests. The rough translation of his sentence is: "methodologies encourage rock stars to become compliance monkeys, and I need everyone on my team to be a rock star".

Sunday, December 05, 2004

The Triumph Of Belief Systems Over Engineering

From www.zeitgeist.com (and nothing has changed ever since):

| | In Computer Science there's... | While in Computer Scientology it's... |
|---|---|---|
| 00000 | John Von Neumann | L. Ron Hubbard |
| 00001 | Communications of the ACM | InformationWeek |
| 00010 | SMTP/MIME | Notes "Mail" |
| 00011 | SNMP | "E-meters" |
| 00100 | "Two Phase Commit" | "Automatic Data Replication" |
| 00101 | TCP/IP | IPX |
| 00110 | The Internet | Compu$erve (or, AOL) |
| 00111 | Usenix/LISA Conference | Novell World |
| 01000 | SecurID/SKey/SecureNetKey | RLA/ARA |
| 01001 | Distributed Systems | Windows95 |
| 01010 | The World Wide Web | IBM/Lotus Notes |
| 01011 | Object Oriented Programming | Visual Basic |
| 01100 | Java | ActiveX |
| 01101 | Linux/NetBSD/FreeBSD | Windows/NT Server |
| 01110 | ACM TOPLAS | "Secrets of the Visual Basic Masters" |
| 01111 | GNU Public License | Patent Lawyers |
| 10000 | Lead Developers | "Empowered Managers" |

Saturday, November 27, 2004

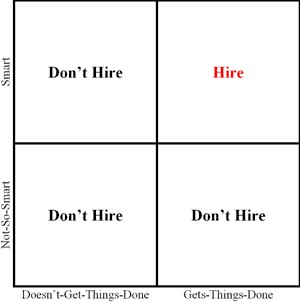

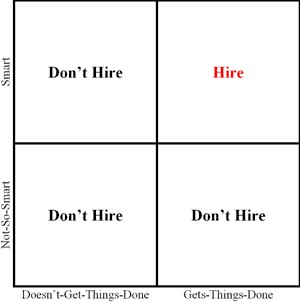

Hire The Right People

For Joel Spolsky, the #1 cardinal criteria for getting hired at Fog Creek are to be Smart and to Get Things Done.

(BTW, why does blogger.com image upload only support JPEGs? (using "Picasa Hello"))

It's hard to find smart doers, but please, keep on searching for them. If you have the slightest doubt that a potential employee fulfills these criteria, don't hire.

The most dangerous species are those who are smart, but don't get things done. First of all, they are harder to identify as such during the recruitment process. Checking their project portfolio helps (still, they usually are smart enough to fake it).

As opposed to not-so-smart doers, who cause operational mistakes (bad enough), smart no-doers are in the position to make strategic mistakes (even worse). Those are the kind of people who can talk their management into following doomed endeavors which will never result in any marketable product, sometimes putting the whole company at risk. If combined with weak social skills and when put in charge, smart no-doers are the best way to get rid of your last smart doers.

About O/R Mappers

We all like object-oriented programming, right? SQL, well... it's cool to have SQL code generated. And your domain object model, too. Some Not-Invented-Here experts will even propose to build a home-brewed O/R mapper. After all, they can do a better job than those Hibernate guys, can't they?

I know O/R mappers look tempting, and even more tempting is to build one on your own, at least to some architecture astronauts. Things might look promising until... people actually start applying it. But at this point in time, the investment has been made. No way back. And more than once, the developers who built the O/R mapper are not the same ones who actually use it, and the blame game is about to begin.

I have seen applications stall, projects fail, people quit, companies go south thanks to the overly optimistic usage of home-made, unproven O/R mappers. Building an O/R mapper is a long and painful road: you have to avoid unnecessary database roundtrips, provide the right caching strategies, avoid limiting the developer ("but we were able to do subselects in SQL"), optimize SQL, support scalability, build a graphical mapping tool and so on. Some people designing SOA / message-oriented systems just DON'T WANT their database model floating around within the whole application, but that's what is most likely going to happen.

Will it support all kinds of old legacy systems, including some bizarre / not-normalized database designs? And yes, sometimes your programmers don't know the consequences of a virtual proxy being expanded. Only database profiling will show what goes on under the hood. "One Join" is certainly the preferred solution in comparison to "N Selects", but this is probably not going to happen once you traverse a 1:N relationship. Will your caching algorithm still work in concurrent/distributed scenarios? And what about reporting? Your report engine might require plain old resultsets, no persistence objects.

All those benefits the architects expected - they just don't turn out that way. "Too much magic", as one of our consultants expressed it. There must be a reason why accessing relational databases is done in SQL. It's just coherent. There is no OO equivalent that fits. There is no silver bullet.
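The "One Join" versus "N Selects" difference is easy to reproduce without any O/R mapper at all. The sketch below uses an in-memory SQLite database and a hand-rolled statement counter; the tables, the data and the helper `query` are invented for this illustration and mimic what a lazily expanded proxy typically does per parent row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE purchase (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT);
    INSERT INTO customer VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    INSERT INTO purchase VALUES (1, 1, 'pen'), (2, 1, 'ink'), (3, 2, 'cup'), (4, 3, 'hat');
""")

counter = {"statements": 0}


def query(sql, params=()):
    """Run one SELECT and count it, so the number of roundtrips becomes visible."""
    counter["statements"] += 1
    return conn.execute(sql, params).fetchall()


# Lazy traversal of the 1:N relationship: one SELECT for the parents,
# then one more SELECT per parent row.
counter["statements"] = 0
lazy = {}
for customer_id, name in query("SELECT id, name FROM customer ORDER BY id"):
    rows = query(
        "SELECT item FROM purchase WHERE customer_id = ? ORDER BY id",
        (customer_id,),
    )
    lazy[name] = [item for (item,) in rows]
lazy_statements = counter["statements"]

# The same data fetched with a single join.
counter["statements"] = 0
joined = {}
for name, item in query(
    "SELECT c.name, p.item FROM customer c "
    "JOIN purchase p ON p.customer_id = c.id ORDER BY c.id, p.id"
):
    joined.setdefault(name, []).append(item)
join_statements = counter["statements"]
```

Both variants produce the same result, but the lazy one needs one statement per customer plus one, while the join needs a single statement regardless of the number of customers.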

Now, there are shades of grey, just as there are application scenarios where O/R mappers do make sense. I recommend considering an O/R mapper if

(1) You have full control over the database design (no old legacy database).

(2) Load and concurrency tests prove that the O/R mapper works in a production scenario.

(3) Your customer favors development speed over future adaptability.

(4) The O/R Mapper supports SQL execution (or a similar kind of query language).

(5) The O/R Mapper is a proven product, and not the pet project of an inhouse architect.

It is also important to distinguish standalone O/R mappers from container-controlled persistence (the latter was designed for running on a middle tier). J2EE Container-Managed-Persistence Entity Beans do make sense in a couple of scenarios (and then again they don't make sense in a lot of others, and J2EE architects will rarely ever recommend a 100% CMP EJB approach). And of course, Hibernate and others do a pretty good job as well on N-tier systems.

Summing up, I strongly agree with Clemens Vasters in most of the cases. He states:

I claim that the benefits of explicit mapping exceed those of automatic O/R mapping by far. There's more to code in the beginning, that's pretty much all that speaks for O/R. I've wasted 1 1/2 years on an O/R mapping infrastructure that did everything from clever data retrieval to smartt [sic] caching and we always came back to the simple fact that "just code the damn thing" yields far superior, more manageable and maintainable results.

I know O/R mappers look tempting, and even more tempting, at least to some architecture astronauts, is building one on your own. Things might look promising until... people actually start using it. But at that point, the investment has been made. No way back. And more than once, the developers who built the O/R mapper are not the same people who actually use it, and the blame game is about to begin.

I have seen applications stall, projects fail, people quit, and companies go south thanks to the overly optimistic use of home-made, unproven O/R mappers. Building an O/R mapper is a long and painful road. You have to avoid unnecessary database roundtrips, provide the right caching strategies, not limit the developer ("but we were able to do subselects in SQL"), optimize SQL, support scalability, build a graphical mapping tool and so on. Some people designing SOA / message-oriented systems just DON'T WANT their database model floating around within the whole application, but that's what is most likely going to happen.

Will it support all kinds of old legacy systems, including some bizarre / non-normalized database designs? And yes, sometimes your programmers don't know the consequences of a virtual proxy being expanded. Only database profiling will show what goes on under the hood. "One Join" is certainly preferable to "N Selects", but it is probably not what you get once you traverse a 1:N relationship. Will your caching algorithm still work in concurrent/distributed scenarios? And what about reporting? Your report engine might require plain old resultsets, not persistent objects.
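
To make the "One Join" versus "N Selects" point concrete, here is a minimal sketch in Java. It assumes a made-up in-memory stand-in for the database (`FakeDb`, which merely counts simulated round trips) and a hypothetical customer/orders schema; none of this comes from a real O/R mapper. The SQL in the comments only indicates what each call would roughly correspond to.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SelectCountDemo {

    /** Hypothetical in-memory "database" that only counts simulated round trips. */
    static class FakeDb {
        int roundTrips = 0;
        private final Map<Integer, List<String>> ordersByCustomer = new LinkedHashMap<>();

        FakeDb() {
            ordersByCustomer.put(1, Arrays.asList("order-11", "order-12"));
            ordersByCustomer.put(2, Arrays.asList("order-21"));
            ordersByCustomer.put(3, Arrays.asList("order-31", "order-32", "order-33"));
        }

        // Roughly: SELECT id FROM customer
        List<Integer> selectCustomerIds() {
            roundTrips++;
            return new ArrayList<>(ordersByCustomer.keySet());
        }

        // Roughly: SELECT * FROM orders WHERE customer_id = ?
        List<String> selectOrdersOf(int customerId) {
            roundTrips++;
            return ordersByCustomer.get(customerId);
        }

        // Roughly: SELECT * FROM customer c JOIN orders o ON o.customer_id = c.id
        Map<Integer, List<String>> selectCustomersJoinOrders() {
            roundTrips++;
            return new LinkedHashMap<>(ordersByCustomer);
        }
    }

    /** Lazy traversal of the 1:N relationship: one select, plus one more per parent row. */
    static int countOrdersLazily(FakeDb db) {
        int total = 0;
        for (int id : db.selectCustomerIds()) {
            total += db.selectOrdersOf(id).size();
        }
        return total;
    }

    /** The same result fetched with a single joined query. */
    static int countOrdersWithJoin(FakeDb db) {
        int total = 0;
        for (List<String> orders : db.selectCustomersJoinOrders().values()) {
            total += orders.size();
        }
        return total;
    }

    public static void main(String[] args) {
        FakeDb lazy = new FakeDb();
        FakeDb joined = new FakeDb();
        System.out.println(countOrdersLazily(lazy) + " orders, " + lazy.roundTrips + " round trips");
        System.out.println(countOrdersWithJoin(joined) + " orders, " + joined.roundTrips + " round trips");
    }
}
```

With three customers the lazy variant needs four round trips where the join needs one; with a few thousand parent rows the difference is what shows up in the database profiler.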

Sunday, November 21, 2004

Real Geeks

Real Geeks don't go to www.amihot.com or www.amihotornot.com, they vote on www.amibiosornot.com. No matter whether it actually is AMI BIOS, or not.

Tuesday, November 16, 2004

Life After Microsoft

Tomorrow, Wednesday, November 17th, at 7:00pm, the Upper-Austrian Workers Chamber will show "Life After Microsoft", a German TV documentary about former Microsoft employees who suffer from serious burnout syndrome.

Afterwards there will be a panel discussion. One panel member is the movie's director, Regina Schilling.

I have seen "Life After Microsoft" before. The Microsoft work ethic is quite demanding. Past achievements of long-time Microsofties don't count for much. You have to prove your commitment anew each day.

On one hand, I would like to experience such working conditions. It must be very stimulating. On the other hand, it also sounds a little bit scary. I remember one of those Ex-Microsofties saying "I turned into a vegetable".

WSDL Binding Styles

IBM provides a good introductory document on the different WSDL Binding Styles. The most common are RPC/encoded and Document/literal. WS-I Basic Profile also recommends Document/literal, which seems to be becoming the broadly accepted standard. This binding style should actually be sufficient in most cases, plus it makes it possible to validate SOAP messages against their XSD schemas.

Well, there are always people who know better, e.g. certain government agencies that provide webservices and somehow decided they had to use the completely uncommon RPC/literal binding style. This means that

(a) .NET 1.0 / 1.1 clients cannot access their webservice, as the .NET framework webservice implementation does not support RPC/literal. Admittedly, there is a workaround, but that's definitely not something for the average programmer who drags and drops the webservice's reference into Visual Studio.

(b) SOAP messages are cluttered with unnecessary type encoding information on each request/response.

Of course those are the same folks who publish hand-coded (hence erroneous) WSDL files.

Thursday, November 11, 2004

Monday, November 08, 2004

Journey To The Past (14): Enterprise Applications (2002-today)

By the end of 2002 I returned to the multi-tier world, working on various enterprise application projects, mainly under J2EE or .NET and .NET Enterprise Services. I split my time between consulting services, project management and programming.

Sunday, November 07, 2004

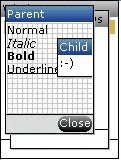

Journey To The Past (13): Wireless Systems (2001-2002)

Programming mobile phones was a real adventure. The segmented memory model of the 16-bit architecture imposed much the same constraints as the 8086 / 80286 had ten years before. Apart from the restricted system resources (which taught me valuable lessons, even for my current work back in the client/server and multi-tier world), the development and debugging environments for embedded devices take some getting used to. I was involved in several customer projects, mainly implementing man-machine interfaces and sometimes even low-level device drivers (e.g. for the Samsung SGH-A500 and Asus J100 phones).

In the old days, common practice was to rewrite whole applications from scratch for each new phone, depending on the underlying device drivers. We tried to improve on that ponderous approach by building a C++ framework for mobile phone applications, which would encapsulate device specifics and provide a feature-rich API.

As one of the senior programmers I was in charge of laying some of the framework's groundwork (GUI, non-preemptive scheduler, API design and similar topics), and I also wrote several tools that completed our developer workbench, e.g. a phone emulation environment for Windows, graphic and font conversion programs, and a language resource editor. I also managed a Java 2 Micro Edition port project.

Saturday, November 06, 2004

Journey To The Past (12): Generic and Dynamic Hypertexts (2000-2001)

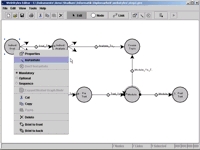

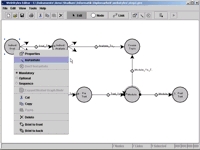

The topic of my second thesis was the implementation of a tool for generic hypertext creation in Java. The resulting system was cleanly designed from beginning to end, and still satisfies my somewhat higher standards of today. I developed the editor (MVC approach using observer mechanisms for loose coupling), including features like HTML content editing for graph nodes, an automation engine for building concrete hypertexts from generic templates, and a hypertext runtime implemented using Java Servlets.

Friday, November 05, 2004

Journey To The Past (11): PC Banking (1999-2001)

Our department was also developing and maintaining a PC banking system. For several years, the frontend had been a 16-bit Windows / MFC application. I led a developer team that ported the old client to Java, utilizing Swing, JDBC (Sybase SQLAnywhere), and an in-house application framework.

Besides the complex business logic and the need for an RDBMS-to-OOP data mapping, building a powerful graphical user interface in Java was the most difficult challenge. We had to build several of the more complex controls on our own, like data grids and navigation panels. The client runs under Windows as well as under Linux and MacOS.

The PC banking application is aimed mainly at business customers. It reached an installation base of about 5,000 companies at the end of 2002, while another 50,000 companies were expected to upgrade within the following 12 months.

Journey To The Past (10): Visual Chat (1998)

My computer science curriculum included an assignment for a medium-scale project. I decided to build a chat program which would let the user move around in a 3D world and meet people at different locations. For several reasons I abandoned the idea of using standard protocols like IRC or VRML. Instead, I built the complete solution (server and client) in Java, employing my own communication protocol and 3D engine. The client actually runs inside any internet browser, without the need to install any additional software. This was a very valuable experience, and included issues like threading, synchronization and networking. It was my first pure OOP project (disregarding the previous Smalltalk experience), and I remember it as a great field for experimenting, without tight schedules or sealed specifications. Of course when I look at the old code today, I can clearly see that I was still lacking some experience back then.

Anyway, the size of Visual Chat's user community had risen steadily to more than 150,000 by the end of 2002. There are dozens of other Visual Chat Server installations on the internet today (it's freeware).

Thursday, November 04, 2004

Journey To The Past (9): Internet Banking and Brokering (1997-2001)

Internet banking was still in its infancy at the beginning of 1997. We designed and developed the internet banking and brokering solution for one of the largest Austrian bank groups. Our system consisted of a Java applet as a frontend, the business logic was running on Sun Solaris servers, and we integrated bank legacy systems like Oracle databases on Digital VAX, or CICS transactions on IBM mainframes. Later on, I was project lead for porting our solution to several other regional banks.

Over the years we replaced the proprietary middleware with a Java 2 Enterprise Edition application server, and kept session state on the server, which allowed for stateless clients. Java Server Pages would now produce HTML for client browsers or WML for WAP mobile phones.

At this point in time, 500,000 people have subscribed to the Internet banking and brokering service, accounting for an average of 100,000 logins per day.

Besides that, I was also responsible for the implementation of an internet ticketing service, including online payment.

Journey To The Past (8): Database Application Programming (1993-1996)

4th Generation Tools were especially en vogue in those days, and heavily applied at university, mainly for their prototyping capabilities. I developed a 4th Dimension application on the Apple Macintosh, which helped university researchers to enter survey data about companies' information technology infrastructure, calculated statistical indices based on alterable formulas, and finally produced some reports, with all kinds of pie and bar charts. I also employed 4th Dimension for my business informatics diploma thesis, when I implemented an information system for university institutes (managing employees, students and course data, automated course enrollment).

We used SQL Windows for building a prototype for a bookstore database application under Windows 3.1, which came with a nice multiple document interface. Another prototype was done for a room resource planning system at university. And I did several freelance projects, mainly on MS Access, e.g. a customer relationship management and billing system for a local media company. We installed this application in a Windows for Workgroups / LAN-Manager multi-user environment. It is still in use today.

My Access knowledge would also help me later on, during military service, when I could spend two out of eight months inside a warm office (while my comrades were being drilled outside in the cold Austrian winter), implementing a database solution for the Airforce Outpatient Department.

Wednesday, November 03, 2004

Journey To The Past (7): Object Oriented Programming (1992)

Getting to know object-oriented programming was a major turning point. The new paradigm was overwhelming, and Smalltalk really enforced pure object-orientation. I spent long hacking nights at the university lab getting to know the VisualWorks Smalltalk framework on an Apple Macintosh II, and somehow managed to hand in the final project on time: a graphical calendar application.

Tuesday, November 02, 2004

Journey To The Past (6): IBM 3090 Mainframe (1991)

Mainframe programming under MVS and PL/1 was exciting at first; it felt like stepping into the "big world" of business application development. Everyday experience turned out to be less fun in the end, when batch jobs were slowed down by my senior university colleagues, who used to play multi-user dungeon games, and each program printout meant waiting until the next morning before I would finally receive it.

Monday, November 01, 2004

Journey To The Past (5): The Age Of Atari (1988-1992)

I really fell in love with my first Atari. It was a 520ST, equipped with 512KB RAM (upgraded to 1MB for a tremendous amount of money as soon as I received payment from a summer job). GFA Basic was a mighty language. This was my introduction to GUI programming (Digital Research's GEM, an early Macintosh look-alike). One could invoke inline assembler code, so I bought a book about 68K assembler, and finally managed to run some performance-critical stuff in native mode.

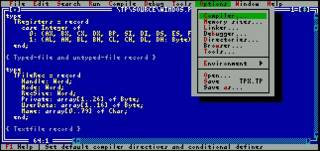

But I also felt the lack of support for modules and data encapsulation in Basic, so I decided to learn C (sounds like a contradiction today, but hey, this was 1988) using Borland Turbo C, which came along with a great graphical development environment for GEM. Phoenix on the other hand was a relational database system that shipped with a very nice IDE. I learned about relational database modeling, and implemented some simple database applications.

Modula-2 was the language of choice in my first university courses, but that wasn't too much of a change from the old Pascal days. Luckily, Modula-2 compilers existed for the Atari ST as well, so I didn't have to spend my time at the always crowded university lab in front of those Apple Macs with 9-inch monitors.

In 1991 I purchased Atari's next generation workstation, the Atari TT-030. It was equipped with a Motorola 68030 processor running at 32 MHz, an 80 MB HD and 8 MB RAM.

But Atari did not manage to make the TT a winner, while the PC was gaining more and more market share. Despite all sentimental attachment, I finally bought a 486-DX2 in 1993.

Friday, October 29, 2004

Journey To The Past (4): The PC (1987)

My school invested heavily in computer equipment, and bought brand new IBM-compatible PCs that came with MS DOS 3.3. We started coding in Turbo Pascal, which was a fine environment for making my first steps in structured programming.

Thursday, October 28, 2004

Journey To The Past (3): Commodore 128 (1986-1988)

The first home computer I ever owned was a Commodore 128D. Starting with C64 Basic, I used to write endless text-based adventure games (until I ran out of memory), which mainly consisted of a page of prints and a prompt asking the user to choose one option for his next move. Later, I discovered Simon's Basic, an enhancement of C64 Basic which allowed for graphical on-screen operations, and started to get to know C128 Basic. I also managed to get CP/M running (the first real operating system for micros) and Turbo Pascal for CP/M, so I could do computer science assignments at home.

Tuesday, October 26, 2004

Curious Perversions In Information Technology

"The Daily WTF" is a collection of really kinky code snippets posted by unfortunate maintenance programmers. The level of these code samples ranges between "unbelievable" and "absolutely hilarious". I actually felt better after skim-reading those postings. I thought I had seen the bad and the ugly, but hey, there is even worse out there.

My personal favorites so far include:

- From the "It worked when I tested it" Department (Or: "Regular Expre-What?")

- Round() we go again

- If only Java supported XML... (I know a similar case - see below. Those guys implemented their own XML-Parser in Java. Maybe we are talking about the same vendor?)

- Not quite getting that Object-polymorphism thing...

- IsTrue()

- It seems my app is running a little slow...

- And I think I'll call it... "Referential Integrity"

- Can you think of a worse solution than this?

- You don't even need a stinkin' WHERE!

- Pointless Pointless Pointless Pointless Pointless Pointless Pointless

So here is my own Top Ten List Of Programming Perversions. I have encountered them over the years. To stay fair, I am not going to post any code or other hints about their origin, so here is an anonymized version:

10. Declare it a Java List, but instantiate it as a LinkedList, then access all elements sequentially (from 0 to n - 1) by invoking List.get(int). Ehm - LinkedList, you know... a LINKED list? Here is what the doc says: "Operations that index into the list will traverse the list from the beginning or the end, whichever is closer to the specified index." Iterators, maybe?

9. Implement your own String.indexOf(String) in Java. Uhm, it does not work, and it does not perform. But hey, still better than Sun's library functions.

8. Draw a Windows GDI DIB (device-independent bitmap) by calculating each and every pixel's COLORREF and invoking SetPixel() on it. SetPixel also updates the screen synchronously. Yes, performance rocks - you can actually watch the painting process scanline by scanline. BitBlt(), anybody?

7. Write a RasterOp library, which - in its most-called function (SetAtom(), the function that is responsible for setting a pixel by applying a bit-mask and one or two bit-shifting operations) - checks for the current clipping rectangle and adjusts the blitting rectangle by creating some Rectangle objects and invoking several methods on those Rectangle objects. Ignore the fact that more or less all simple RasterOps (like FillRect(), DrawLine() and the like) already define which region will be affected and offer a one-time possibility to adjust clipping and blitting. Instead do it repeatedly on each pixel drawn and create a performance penalty of about 5000%. Also prevent SetAtom() from being inlined by the compiler.

6. Custom ListBox control, programmed in C++. On every ListBox item's repaint event, create about ten (custom) string objects on the stack. Copy-assign senselessly to and from those strings (which all have the same character content), which leads to constant re-allocation of the strings' internal buffers. Score extra points by doing all of that on an embedded system. Really, a string's value type assignment operator works differently from the reference type assignment operator, which simply copies references? It might invoke all kinds of other weird stuff, like malloc(), memcpy(), free() and the like? How should you have known that?

5. Use a Java obfuscator to scramble string constants, which are hardcoded and cluttered all over inner-most loop bodies (what about constants?). This implies de-obfuscation at runtime (including some fancy decryption-algorithm), each time the string is being accessed. Yes, it is used for self-implemented XML-parsing (see (2)) on megabytes of XML-streams.

4. Convert a byte-buffer to a string under C++/MFC: Instantiate one CString for each byte, write the byte to the CString's buffer, and concatenate this CString to another CString using the "+"-operator.

3. Build your own Object-Relational-Mapper, ignoring the fact that there are plenty of them freely available. Argue that this lowers the learning curve for programmers who don't know SQL (sure, it makes sense to employ programmers who don't know SQL in the first place). When your (actually SQL-capable) programmers complain that your ORM is missing a working query language, tell them to load a whole table's content into memory, and apply filters in-memory. Or: open a backdoor for SQL again (queries only). Let them define the mapping configuration in XML without providing any tool for automation or validation. Find out that your custom ORM just won't scale AFTER it has been installed on your customer's production environment. Finally conclude that you preferred shooting yourself in the foot to using Hibernate.

2. The self-implemented Java XML-Parser on a JDK 1.3 runtime (huh, there are dozens of JAXP-compliant, freely available XML-Parsers out there). Highly inefficient (about 100 times slower than Apache Xerces) and actually not working (what are XML escape sequences again?).

1. Invent the One-Table-Database (yes, exactly what you are thinking of now...)
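
To illustrate item 10, here is a minimal sketch in Java of the two traversal styles. The class and method names are made up for this post, and the list size in `main` is an arbitrary choice; actual timings depend on the machine and JVM, so none are claimed here.

```java
import java.util.LinkedList;
import java.util.List;

public class LinkedListTraversal {

    /** Sums by index. On a LinkedList every get(i) walks the list again, so the loop is O(n^2). */
    static long sumByIndex(List<Integer> list) {
        long sum = 0;
        for (int i = 0; i < list.size(); i++) {
            sum += list.get(i);
        }
        return sum;
    }

    /** Sums via the list's iterator (for-each): a single pass, O(n) for any List. */
    static long sumByIterator(List<Integer> list) {
        long sum = 0;
        for (int value : list) {
            sum += value;
        }
        return sum;
    }

    public static void main(String[] args) {
        List<Integer> list = new LinkedList<>();
        for (int i = 0; i < 50_000; i++) {
            list.add(i);
        }

        long t0 = System.nanoTime();
        long byIndex = sumByIndex(list);
        long t1 = System.nanoTime();
        long byIterator = sumByIterator(list);
        long t2 = System.nanoTime();

        System.out.println("get(i):   sum=" + byIndex + " in " + (t1 - t0) / 1_000_000 + " ms");
        System.out.println("iterator: sum=" + byIterator + " in " + (t2 - t1) / 1_000_000 + " ms");
    }
}
```

Both methods return the same sum; only the amount of pointer-chasing differs. Declaring the variable as List is fine, as long as the code does not assume cheap random access.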

Journey To The Past (2): Commodore CBM 8032 (1985-1986)

At high school there was a broad variety of microcomputers, namely an Apple II for the chemistry lab (I didn't like chemistry enough to sign up for advanced voluntary classes, so I never had the chance to access it), a Radio Shack TRS-80, and several Commodore CBMs. We used the CBM (an upgraded Commodore PET model) in computer science classes, where we learned about some more advanced topics, and solved problems like waiting queue simulations, or simple text-based, keyboard-controlled racing games.

Monday, October 25, 2004

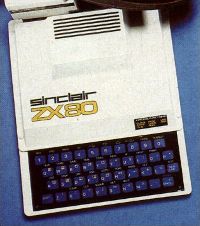

Journey To The Past (1): Sinclair ZX-80 (1984)

My first programming experiments go back to the year 1984. A schoolmate of mine owned a Sinclair ZX-80, an early 8-bit microcomputer that came with the famous Zilog Z80, 16K RAM and a rubber-like keyboard, and could be plugged into a TV set. I somehow managed to convince my friend to lend me his ZX-80 over the summer holidays, and started writing my first lines of Basic. The storage system was a tape recorder, simply connected with audio cables.

1984

It has been twenty years since my first contact with computers. Time for a journey to the past. I have spent some time digging for old pictures and screenshots, and composed a mini-series of hardware- and software-technologies that I have worked with over the years.

You see, that's why 1984 was not going to be like George Orwell's "1984". OK, someone else actually said that. For me, it all started with a Sinclair ZX-80...

From IEEE Spectrum

- If an apparently serious problem manifests itself, no solution is acceptable unless it is involved, expensive and time-consuming.

- Completion of any task within the allocated time and budget does not bring credit upon the performing personnel - it merely proves that the task was easier than expected.

- Failure to complete any task within the allocated time and budget proves the task was more difficult than expected and requires promotion for those in charge.

- Sufficient monies to do the job correctly the first time are usually not available; however, ample funds are much more easily obtained for repeated major redesigns.

A Luminary Is On My Side

I was reading Joel Spolsky's latest book last night, where I found another real gemstone: "Back to Basics", which shows some surprisingly (or probably not so surprisingly?) close analogies to what I tried to express just a short time ago. Joel Spolsky writes about performance issues with C strings, in particular the strcat() function, and concludes:

These are all things that require you to think about bytes, and they affect the big top-level decisions we make in all kinds of architecture and strategy. This is why my view of teaching is that first year CS students need to start at the basics, using C and building their way up from the CPU. I am actually physically disgusted that so many computer science programs think that Java is a good introductory language, because it's "easy" and you don't get confused with all that boring string/malloc stuff but you can learn cool OOP stuff which will make your big programs ever so modular. This is a pedagogical disaster waiting to happen.

Here is what I had to say some weeks ago:

The course curriculum here in Austria allows you to walk through a computer science degree without writing a single line of C or C++ (not to mention assembler).

[...]

What computer science professors tend to forget is that the opposite course is likely to happen as well to those graduates once they enter working life (those who have only applied Java or C#). There is more than Java and C# out there. And this "backward" paradigm shift is a much more difficult one. Appointing inexperienced Java programmers to a C++ or C project is a severe project risk.

It is comforting to know that my opinion matches the conclusions of a real software development celebrity. I admire Joel Spolsky's knowledge of the software industry's internal mode of operation, and the same is true for his excellent writing style. His writings are perceptive and entertaining at the same time.

In another article, "The Guerrilla Guide to Interviewing", Joel states:

Many "C programmers" just don't know how to make pointer arithmetic work. Now, ordinarily, I wouldn't reject a candidate just because he lacked a particular skill. However, I've discovered that understanding pointers in C is not a skill, it's an aptitude. In Freshman year CompSci, there are always about 200 kids at the beginning of the semester, all of whom wrote complex adventure games in BASIC for their Atari 800s when they were 4 years old. They are having a good ol' time learning Pascal in college, until one day their professor introduces pointers, and suddenly, they don't get it. They just don't understand anything any more. 90% of the class goes off and becomes PoliSci majors, then they tell their friends that there weren't enough good looking members of the appropriate sex in their CompSci classes, that's why they switched. For some reason most people seem to be born without the part of the brain that understands pointers. This is an aptitude thing, not a skill thing – it requires a complex form of doubly-indirected thinking that some people just can't do.

<humor_mode>Java is for wimps. Real developers use C.</humor_mode>

But there is something very true in that. So much harm is done by people who just don't understand the underpinnings of their code.

I have been quite lucky so far. Either I could choose my project team members (admittedly, from a quite limited pool of candidates), or I happened to work in an environment with sufficiently qualified people anyway. But I know of many occasions when the opposite happened. The candidates cheated on their resumes. Their presentation skills were good, so they obscured their technical incompetence - even to technically savvy interviewers. Those interviewers just didn't dig deep enough. They were too busy presenting their company, talking 80% of the time. They really should just have asked the right questions instead. And they should have spent more time listening carefully.

Joel is actually looking for Triple-A people only. That's kind of hard when you work in a corporate environment. I do not have any influence on the recruiting process itself, so there is no "Hire" or "No Hire" flag I could wave. In order to attract excellent people, I can only try to ensure a professional working environment within the corporate boundaries. Still, this is not what potential hires get to see when they go through the recruiting process.

But I did something else. I forwarded "The Guerrilla Guide to Interviewing" to the people in charge.

Wednesday, October 20, 2004

The StringBuffer Myth

Charles Miller writes about his assessment of StringBuffer usage in Java:

One of my pet Java peeves is that some people religiously avoid the String concatenation operators, + and +=, because they are less efficient than the alternatives.

The theory goes like this. Strings are immutable. Thus, when you are concatenating "n" strings together, there must be "n - 1" intermediate String objects created in the process (including the final, complete String). Thus, to avoid dumping a bunch of unwanted String objects onto the garbage-collector, you should use the StringBuffer object instead.

So, by this theory,

String a = b + c + d; is bad code, while String a = new StringBuffer(b).append(c).append(d).toString() is good code, despite the fact that the former is about a thousand times more readable than the latter.

For as long as I have been using Java, this has not been true. If you look at StringBuffer handling, you'll see the bytecodes that a Java compiler actually produces in those two cases. In most simple string-concatenation cases, the compiler will automatically convert a series of operations on Strings into a series of StringBuffer operations, and then pop the result back into a String.

The only time you need to switch to an explicit StringBuffer is in more complex cases, for example if the concatenation is occurring within a loop (see StringBuffer handling in loops).

Charles compares these two approaches:

return a + b + c;

VS.

StringBuffer s = new StringBuffer(a);

s.append(b);

s.append(c);

return s.toString();

As Charles correctly points out, the Java compiler internally replaces the string concatenation operators with a StringBuffer, which is converted back to a String at the end. This looks like the same result as using StringBuffer directly.

But the Java bytecode that Charles analyzed in detail tells only half of the story. What he did not take a closer look at is what happens inside the call to the StringBuffer constructor, which the compiler inserted. And that's where the real performance penalty strikes hard:

The constructor only allocates a buffer for holding the original String plus 16 characters. Not more than that. In addition, StringBuffer.append() expands the StringBuffer's capacity only once the buffer is full: to roughly twice the old capacity, or to just fit the appended String if that is still not enough.

That means repeated re-allocation and copying across consecutive calls to StringBuffer.append().

You - the programmer - know better than that. You might know exactly how big the buffer is going to be in its final state - or if you don't know the exact number, you may at least apply a decent approximation. You can then construct your StringBuffer with that capacity right away, using the StringBuffer(int) constructor.

No repeated reallocation is necessary, which means better performance and less work for the garbage collector. And that is where the real benefit lies when using StringBuffer instead of the String concatenation operators.
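The capacity behavior of the StringBuffer constructor can be observed directly. What follows is a minimal sketch, not code from Charles's article or from the JDK sources: the class StringBufferCapacityDemo and its helper methods are made-up names, and counting changes of capacity() is only a proxy for internal re-allocations. The one documented fact it relies on is that new StringBuffer(String) starts with the String's length plus 16 characters. The growth policy is a JDK implementation detail, so the sketch measures it instead of assuming it.

```java
// Illustration only; class and method names are hypothetical.
class StringBufferCapacityDemo {

    // Appends each piece and counts how often capacity() changes,
    // used here as a proxy for an internal re-allocation.
    static int countGrowthSteps(StringBuffer buffer, String[] pieces) {
        int steps = 0;
        int lastCapacity = buffer.capacity();
        for (int i = 0; i < pieces.length; i++) {
            buffer.append(pieces[i]);
            if (buffer.capacity() != lastCapacity) {
                steps++;
                lastCapacity = buffer.capacity();
            }
        }
        return steps;
    }

    // Sum of all piece lengths, i.e. the final size of the result.
    static int totalLength(String[] pieces) {
        int total = 0;
        for (int i = 0; i < pieces.length; i++) {
            total += pieces[i].length();
        }
        return total;
    }

    public static void main(String[] args) {
        String[] pieces = new String[100];
        for (int i = 0; i < pieces.length; i++) {
            pieces[i] = "0123456789";
        }

        // Seeded with a String: capacity is its length plus 16.
        System.out.println(new StringBuffer("abc").capacity()); // 19

        // Default-sized buffer versus one sized for the final result.
        int grown = countGrowthSteps(new StringBuffer(), pieces);
        int presized = countGrowthSteps(
                new StringBuffer(totalLength(pieces)), pieces);
        System.out.println("default buffer grew " + grown + " time(s)");
        System.out.println("pre-sized buffer grew " + presized + " time(s)");
    }
}
```

The pre-sized buffer never has to grow, while the default one does. How often it grows depends on the JDK in use.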