- Joel On Software (December 29th, 2005): The Perils Of JavaSchools

- Arno's Software Development Weblog (October 4th, 2004): Knowing Java But Not Having A Clue?

Saturday, December 31, 2005

A Luminary Is On My Side (II)

A luminary is on my side (hey, not for the first time):

Friday, December 30, 2005

As A Software Developer, You Don't Live On An Island (Part II)

Yesterday I talked about the fact that as software developers, we should be prepared for change.

But of course, there is the other extreme as well. Self-taught semi-professionals missing real project experience as well as the feedback of more adept peers. Those who jump on the bandwagon of every hype, but do so in a fundamentally flawed way.

Like the guys who took the "a real man writes his own web framework" joke seriously and invested several man-years in a doomed attempt to create a chimera that combined web user interface components and object-relational mapping (and by "combine" I mean tight coupling, you bet) - just because they didn't like Struts or Hibernate and, quote: "Java Server Faces are still in Beta". They also didn't like the Java Collections API, so they wrote their own (interface-compatible) implementation.

Another developer was so convinced of webservices, and of any other technology he could possibly apply, that he suggested compiling report layouts into .NET assemblies, storing those assemblies in database BLOBs and downloading them to the intranet(!) client using webservices (byte arrays serialized over XML). Need I say more?

Thursday, December 29, 2005

As A Software Developer, You Don't Live On An Island (Part I)

Maybe my expectations are too high, but how come so many software developers suffer from ostrich syndrome? They have been doing their RPG or Visual Basic or SQLWindows or <place_your_favorite_outdated_technology_here> programming for ten years or longer, but in all that time they never managed to look beyond the end of their own nose. Now they suddenly face a migration to Java or VB.NET, or they must provide a webservice interface or the like. OOP, what's that? Unicode, what's that? XML, that looks easy, let's just do some printf()s (or whatever the RPG counterpart to printf() is ;-) ) with all these funny brackets. Oh, and when there is something new to learn, those folks of course expect their employer to send them on training for months.

I always thought this profession would mainly attract people who embrace change. Seems I was wrong.

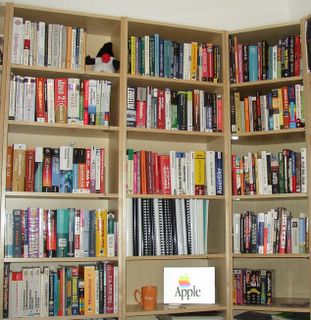

Besides everyday project business, I try to stay in touch with the latest in technology: reading books, magazines and weblogs, listening to podcasts, talking with colleagues who are into some new stuff, and the like.

Reading one book a month about something you haven't dealt with yet (be it a C# primer or a Linux admin tutorial or whatever) - that's the minimum I recommend to every software developer with some sense of personal responsibility.

Wednesday, December 28, 2005

Seven Habits Of Highly Effective Programmers

"Seven Habits of Highly Effective Programmers" is one of several excellent articles on technicat.com.

Wednesday, December 21, 2005

LINQ

LINQ or "Language Integrated Query" will be part of .NET 3.0 - absolutely amazing stuff. I have to admit I am still rubbing my eyes - did Anders Hejlsberg just solve the object/relational mismatch as well as making painful XML programming models like DOM or XPath obsolete? Let's wait and see...

Now, I have not really been too fond of one or two C# language constructs (don't get me wrong, the features themselves are great, it's just their syntax flavor - e.g. the way delegates and events look), but I have always admired Hejlsberg (of Turbo Pascal, Delphi and C# fame, for those who don't know) and his achievements for our industry. And LINQ really seems to be the icing on the cake.

Thursday, December 15, 2005

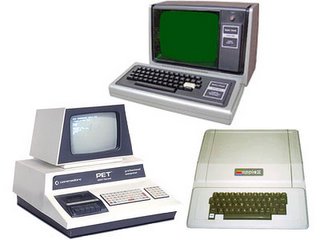

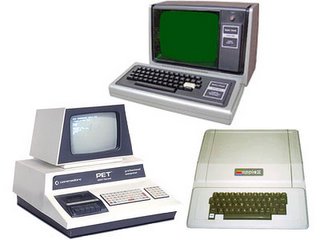

30 Years Of Personal Computer

Nice article about 30 Years Of Personal Computer, just one doubt: Did the Tandy-Radio Shack 80 really outsell the Apple II in the late 70s? I always assumed Apple was market leader until the introduction of the IBM PC.

By the way, we had both a TRS-80 and some Commodore CBMs side by side at school, but we only used the Commodores, because to us they somehow seemed cooler.

Saturday, December 10, 2005

Object Oriented Crimes

80% of software development is maintenance work. That's reality, and I don't complain about that. I also don't complain about having to dig through other people's code that is sometimes hard to understand. It's just the same as when looking at some of my own old work - the design mistakes of the past are pretty obvious today. Maybe it was just that you had to find a pragmatic solution under project schedule pressure back then - it might not be beautiful, but it worked.

But then there are also programming perversions that are simply beyond good and evil (similar to the ones posted on The Daily WTF). Let me give you two examples - coincidentally each of those came from German vendors...

Case #1:

Java AWT UI (yes, the old days...), card layout, so there is one form visible at a time and the other forms are sent to the back. Now those UI classes need to exchange data, and this was achieved by putting all data into protected static variables in the base class, so that all derived classes could access it. Beautiful, isn't it? But that's not the whole story. Those static variables weren't business objects in the common sense - they were plain stupid string arrays. One array for each business object attribute (and of course, each datatype was represented by a string), and the actual "object" was identified by the array index. You know, something like this:

    protected static String[] employeeFirstName;
    protected static String[] employeeLastName;
    protected static String[] employeeIncome;
    protected static int currentEmployee;

The memory alone still makes me shudder today...
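For contrast, here is a minimal sketch of the more conventional alternative: one typed object per employee, and a holder that is handed to the forms explicitly instead of living in static fields of a UI base class. The class names `Employee` and `EmployeeSelection` and their methods are made up for illustration; nothing here is taken from the vendor's code.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;

// Hypothetical business object replacing the three parallel String arrays.
final class Employee {
    private final String firstName;
    private final String lastName;
    private final BigDecimal income; // a numeric type instead of a String

    Employee(String firstName, String lastName, BigDecimal income) {
        this.firstName = firstName;
        this.lastName = lastName;
        this.income = income;
    }

    String getFirstName() { return firstName; }
    String getLastName() { return lastName; }
    BigDecimal getIncome() { return income; }
}

// Hypothetical holder for the employees and the current selection.
// An instance is passed to each form; no static state is involved.
final class EmployeeSelection {
    private final List<Employee> employees = new ArrayList<>();
    private int currentIndex = -1; // -1 means "nothing selected"

    void add(Employee employee) {
        employees.add(employee);
    }

    void select(int index) {
        if (index < 0 || index >= employees.size()) {
            throw new IndexOutOfBoundsException("No employee at index " + index);
        }
        currentIndex = index;
    }

    Employee current() {
        if (currentIndex < 0) {
            throw new IllegalStateException("No employee selected");
        }
        return employees.get(currentIndex);
    }
}
```

With this shape, an attribute can no longer drift out of sync with its neighbours in another array, and the compiler catches attempts to treat an income as text.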

Case #2:

Years later, this time we talk about a .NET WinForms application. Somehow the development lead had heard that it's a good idea to separate visualization from business model, so he invented two kinds of classes: UI classes and business object classes. Doesn't sound too bad? Well, first of all he kind of forgot about the third layer (data access, that's it!), and spilled his SQL statements all over his business objects. And by that I don't mean that you could read your SQL statement in clear text. No, they were created by a bizarre concatenation mechanism, which included the concept of putting some parts of the SQL code in base classes (re-use, you know), and distributing the rest over property getters within the derived classes. And this SQL code did not only cover business-relevant logic, it was also responsible for populating completely unrelated UI controls. The so-called "business object" also contained information about which grid row or which tab page had been selected by the user - yeah, talk about interpreting another OO concept completely wrong. The Model-View-Controller design pattern is all about loose coupling, so that models can be applied with different views.

Uuuh, I try to repress the thought about it, but it keeps coming back...

For a developer, being able to start a project without this kind of legacy burden is a blessing. I appreciate it each time management decides to trust its own people to build up something from scratch, instead of buying in third party garbage like that.

Sunday, November 27, 2005

My Favorite Software Technology Webcasts / Podcasts

Sorry, no longish postings these days, as I am in release crunch mode right now. In the meantime, you might want to check out what I consider some of the most interesting software technology webcasts / podcasts out there:

I listen to them on a daily basis using an iPod shuffle on my way to and from work.

Saturday, November 19, 2005

ADO.NET Transactions, DataAdapter.Update() And DataRow.RowState

I guess that almost every ADO.NET developer stumbles upon this sooner or later... As we all know, DataAdapter.Update() invokes DataRow.AcceptChanges() after a successful DB update, insert or delete. But what if that whole operation happens within a transaction, and a rollback occurs later on in the process? Our DataRow's RowState has already changed from Modified/Added/Deleted to Unchanged (or Detached in case of a delete), our DataRowVersions are lost, and hence our DataSet does not reflect the rollback that happened on the database.

What we are doing so far in our projects is to work on a copy of the original DataSet, so we can perform a "rollback" in memory as well. In detail, we merge back DataRow by DataRow, which allows us to keep not only its original RowState, but also different DataRowVersions (Original, Current, Proposed and the like). This is essential for subsequent updates to work properly.

But merging thousands of DataRows has a huge performance penalty (esp. if you have Guids as primary keys - this might be caused by a huge number of primary key comparisons, but I am not really sure about that), which sometimes forces us to disable that whole feature and do a complete reload from the DB after an update instead (but then again, this will wipe pending updates which were rolled back).
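To make the state problem and the copy-based workaround concrete, here is a small model written in Java. It is deliberately not ADO.NET code: `TrackedRow`, `TrackedState` and `RollbackSketch` are hypothetical names, the row holds a single value, and instead of merging row by row as described above it simply keeps the untouched originals when the transaction rolls back.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical change-tracking states (ADO.NET has more).
enum TrackedState { UNCHANGED, MODIFIED }

// Simplified, hypothetical row with an original and a current version.
final class TrackedRow {
    private String originalValue;
    private String currentValue;
    private TrackedState state = TrackedState.UNCHANGED;

    TrackedRow(String value) {
        this.originalValue = value;
        this.currentValue = value;
    }

    void setValue(String value) {
        currentValue = value;
        state = TrackedState.MODIFIED;
    }

    // Models what happens after a successful update statement:
    // the pending-change information is discarded.
    void acceptChanges() {
        originalValue = currentValue;
        state = TrackedState.UNCHANGED;
    }

    TrackedRow copy() {
        TrackedRow clone = new TrackedRow(originalValue);
        clone.currentValue = currentValue;
        clone.state = state;
        return clone;
    }

    TrackedState getState() { return state; }
    String getOriginalValue() { return originalValue; }
    String getCurrentValue() { return currentValue; }
}

final class RollbackSketch {
    // Performs the "update" on a working copy and returns the rows the caller
    // should keep: the copy on commit, the untouched originals on rollback.
    static List<TrackedRow> updateInTransaction(List<TrackedRow> rows, boolean transactionCommits) {
        List<TrackedRow> workingCopy = new ArrayList<>();
        for (TrackedRow row : rows) {
            workingCopy.add(row.copy());
        }
        for (TrackedRow row : workingCopy) {
            row.acceptChanges(); // the adapter would do this per successful statement
        }
        return transactionCommits ? workingCopy : rows;
    }
}
```

After a rollback the caller still holds rows in the modified state with their original values intact, so a later update attempt has something to submit. The cost of copying every row is what this model makes visible, too.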

So right now I am looking for a faster alternative. ADO.NET 2.0 provides a new DataAdapter property, namely DataAdapter.AcceptChangesDuringUpdate. This is exactly what we need, but we are still running under .NET 1.1.

Microsoft ADO.NET guru David Sceppa came up with another approach:

If you want to keep the DataAdapter from implicitly calling AcceptChanges after submitting the pending changes in a row, handle the DataAdapter's RowUpdated event and set RowUpdatedEventArgs.Status to UpdateStatus.SkipCurrentRow.

That is actually what I will try to do next.

Friday, November 18, 2005

Tuesday, November 15, 2005

Latest Purchase On Thinkgeek.Com

The "Binary Dad" t-shirt

In order to save on overseas shipping costs, we always pool our orders. Top item among my friends' orders this time was the "Schrödinger's Cat Is Dead" t-shirt.

Friday, November 11, 2005

Is MS Windows Ready For The Desktop?

No offense intended, I am myself a very Windows-centric developer, but "Is MS Windows Ready For The Desktop" is just too funny!

So now comes partitioning. Since I know from Linux that NTFS is a buggy file system (never got it to work smooth under Linux), I choose the more advanced FAT-fs, since there's no other option, this should be right for me. It's a disappointment I can't implement volume management tools like LVM or EVMS, or an initrd, but I decide to forget about this and go on, believing the Windows-team has made the right decision by not supporting this cumbersome features. So this means, I can forget about software-RAID and hard disk encryption supported by the kernel. But what about resizing partitions? Steve explains, this can be done in Windows. You go to your software-supplier, ask for the free Partition Magic 8.0, and after paying your €55 NVC, the Partition Magic CD can do a (tiny) part of the stuff EVMS can, like resizing.

Wednesday, November 09, 2005

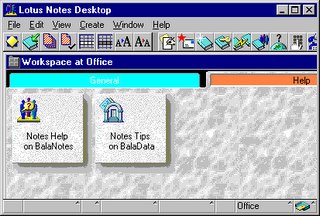

Lotus Notes

Let me talk about Lotus Notes today (the client only, as this is all I know about - and by the way, this is why the UI matters so much: users just don't care about fancy server stuff like sophisticated data replication, they care about their experience on the frontend side of things). I mean, some people have learned to swallow the bitter pill, quietly launching their little KillNotes.exe in order to restart Notes after each crash without having to reboot the whole system. Others may curse on a daily basis (Survival of the Unfittest, Lotus Notes Sucks, "Software Tools of Dirt"-Page (in German), I hate Notes, Interface Hall of Shame or Notes Sucks come to mind). As for myself, I am just going to calmly describe what working with Lotus Notes looks like:

This screenshot shows a typical Lotus Notes 4.5 workplace as of 1996. Notes 5.0, which shipped in 1999, looks pretty much the same and is still widely used today.

- The Notes client crashes when doing a search, it crashes when inserting data from the clipboard, it crashes on all kinds of occasions, and the stuff you worked on is gone. No draft folder, nothing.

- After Notes crashes, there is no way to restart it, because some processes remain in a zombie-like state. Luckily there is KillNotes. It is even available from an IBM download site. Think about it, instead of fixing those crashes, or instead of avoiding zombie processes being left behind after crashing, they did the third-best thing: provide a tool to clean up those zombie-processes. Of course out of the 100 million Lotus Notes users worldwide, maybe 1% have ever heard of that tool at all.

- The UI is... special. The Look & Feel is incomparable to anything else you might know. Most famous: The "You have mail"-Messagebox - pops up, even when you are typing in another application. Makes you switch to your inbox, where you find - nothing. No new mail. UI controls that look and behave completely differently from what the standards of the underlying operating system would suggest. Modeless dialogs for text formatting. Undo rarely ever works. Pasting some HTML or RichText data from the clipboard blocks the system for several seconds. There is no toolbar icon for refreshing the current list of documents (e.g. mail inbox) - refreshing happens when you click into a grey window area (you'd better know about it!). Forms do not provide comboboxes for listing values to choose from, only modal dialogs that open up and contain listboxes. Icons don't comply with the desktop's standard, e.g. the icon for clipboard pasting is a pin, the icon for saving is a book. Grids are not interactive, e.g. no way to change column order, only rudimentary grid sorting. And so on and so on...

- When sent to the background, the client sometimes starts consuming CPU power (up to 90%) - slowing down the foreground application, doing I don't know what. As soon as you bring it to the front - back to 0%. Like a little child saying "It wasn't me". "Un-cooperative multitasking" at its best (I wonder what would have happened during the non-preemptive days of 16bit-Windows).

- Text search: Runs in the main UI thread, so it blocks the whole application until it's done - and this might take a looong time. I don't know for sure, but it's so slow I suspect it has no fulltext index, and thus has to scan each and every document again and again. And if that's not the case, its index seek implementation must be bogus. Anyway, more often than not the search result is just plainly wrong - documents that clearly should appear simply don't show up. Searches are only possible for a single criterion, not more than that.

- From time to time Notes causes flickering by senselessly repeated window repainting.

- There are those constant security messages popping up, that keep on asking whether to create a counter certificate or whatever in order to be able to access certain documents. I have a degree in computer science, but I find these messages completely incomprehensible. Go figure how much the average end-user will enjoy that.

- All those weaknesses are - per definitionem - inherited by most Lotus Notes applications.

As far as I have heard, some things have been improved in Notes 6.5, while others haven't. But you know, large corporations sometimes skip one major release on their installed base, because of the effort involved in updating all the clients or because they couldn't migrate their applications built on top of it or whatever. This just proves that software tends to stay around longer than some vendors originally anticipated.

Jeff Atwood - as usual - sums things up pretty accurately:

We've all had bad software experiences. However, at my last job, our corporate email client of choice was Lotus Notes. And until you've used Lotus Notes, you haven't truly experienced bad software. It is death by a thousand tiny annoyances - the digital equivalent of being kicked in the groin upon arrival at work every day.

Lotus Notes is a trainwreck of epic (enterprise?) proportions, but it's worth studying to learn what not to do when developing software.

Thank You McAfee

So second level support calls in: "Listen, one customer installed our application, but he keeps on insisting that the user interface is all empty". "Yeah right", you think, thanking god that you don't have to answer hotline calls.

A quick Google search brings up a well-known suspect: McAfee AntiVirus. According to this Microsoft knowledge base article, McAfee's buffer overflow protection feature can screw WinForms applications - and that's exactly what had happened at the customer's site. Luckily there is a patch available.

BTW, I stopped using McAfee privately when they changed their virus definition subscription model - I hate to pay for a program that literally expires after 12 months.

Friday, November 04, 2005

First Impressions Of Visual Studio 2005 RTM

I installed Visual Studio 2005 RTM yesterday, and - as a first endurance test - tried to convert one of our larger Visual Studio 2003 projects to the new environment. The conversion finished successfully, but the compilation raised several errors:

- One self-assignment ("a = a") caused an error (this was not even a warning under .NET 1.1), I guess this can be toggled by lowering the compiler warning level. Anyway it is a good thing the compiler pointed that out, as the developer's intention clearly was to write "this.a = a". Luckily the assignment to "this.a" happened already in the base class as well.

- Typed Datasets generated by the IDE are not backward compatible, as the access modifiers for InitClass() have changed to "private". We had a subclass which tried to invoke base.InitClass(), hence broke the build.

- The new compiler spat out several naming collision errors when equally named third party components were not accessed through their fully qualified names (although in the code context, due to the type they were assigned to, it was clear which type to use). The .NET 1.1 compiler used to have no problem with that.

- Visual Studio 2005 also crashed once during compilation. I had to compile one project individually in order to get ahead.
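The self-assignment from the first bullet is easy to reproduce outside of C# as well. Here is a minimal sketch in Java (the original code was C#; `BrokenPoint` and `FixedPoint` are hypothetical names) that shows why "a = a" is almost certainly a bug: the parameter shadows the field, so the field is never written.

```java
// Hypothetical class for illustration: the constructor parameter shadows the field.
final class BrokenPoint {
    private int a;

    BrokenPoint(int a) {
        a = a; // assigns the parameter to itself; the field keeps its default value 0
    }

    int getA() { return a; }
}

// The intended version: "this.a" refers to the field, "a" to the parameter.
final class FixedPoint {
    private int a;

    FixedPoint(int a) {
        this.a = a;
    }

    int getA() { return a; }
}
```

Constructing `BrokenPoint` with any argument leaves its field at the default value, which is why a compiler diagnostic for this pattern is welcome.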

After fixing those issues, our application could be built. But I faced runtime problems as well, e.g. a third party library (for XML encryption) which suddenly produced invalid hash results (sidenote: XML encryption is now supported by .NET 2.0 - my colleagues and I were joking that this might be just another case of DOS Ain't Done 'Til Lotus Won't Run).

Don't get me wrong, Visual Studio 2005 is a cool product with lots of long-awaited features. When starting a project from scratch, I would be tempted to jump on board with 2005. But with .NET 1.1 legacy projects lying around, there is just no way we can migrate to 2005 yet.

By the way, wiping an old Visual Studio 2005 Beta or CTP version is a nightmare (you are expected to manually uninstall up to 23 components in the correct order)! Luckily Dan Fernandez helped out with the Pre-RTM Visual Studio 2005 Automatic Uninstall Tool.

Thursday, November 03, 2005

J2EE Inhouse Frameworks

Spring Framework lead developers Rod Johnson and Juergen Hoeller have their own opinion about J2EE Inhouse Frameworks (taken from their JavaOne 2005 presentation):

Tuesday, November 01, 2005

In The Kingdom Of The Blind, The One-Eyed Man Is King

Don't you also sometimes wonder where all the top-notch developers have gone? As more and more unskilled novices enter the scene while simultaneously some old veterans are losing their edge, chances are there are not too many star programmers left to steer the ship through difficult waters. Not that this is happening everywhere, it's just that it seems to be happening at some of the software shops I know, so I am quite sure the same is true for other places as well...

The combination of newcomers on one hand, who have some theoretical knowledge about new technology, but at the same time no idea how to turn that knowledge into working products, and long-serving developers on the other hand, who are not willing to relearn their profession every single day, is particularly dangerous.

The greenhorns often load a pile of tech buzzwords upon their more-experienced peers along with poorly engineered, home-brewed frameworks (even though they might know about third party software platform and component markets, they tend to suffer from the Not-Invented-Here Syndrome). After that, they endlessly keep on theorizing whether to favor the Abstract Factory Pattern over the Factory Method Pattern or not.

At the same time their seasoned colleagues - overwhelmed with all that new stuff - just want to turn back time to the good old days when all they had to worry about was whether the Visual Basic interpreter swallowed their code or their mainframe batch jobs produced a valid result. In the kingdom of the blind, the one-eyed man is king.

Really it's weird - the people who are smart AND experienced AND get something done, they seem to be an endangered species. Some of them drift off to middle management (which is often a frustrating experience, as they can no longer do what they do best), others are still around, but for one reason or another they are no longer in charge of taking the lead on the technology frontier. It's the architecture astronauts who took over. And it's the MBAs who allowed them to. Pity.

Wednesday, October 26, 2005

PDC05 Sessions Online

In case you - just like me - were unable to attend the PDC05, Microsoft now put all sessions online including streaming and downloadable presentation videos, transcripts and PowerPoint slides.

Sunday, October 23, 2005

Correlated Subqueries

Just last week we faced a SQL performance issue in one of our applications during ongoing development work. Reason was a correlated subquery - that is, a query that can't be evaluated independently, but depends on the outer query for its results, something like:

    select A1,
           (select min(B1)
            from TableB
            where TableB.B2 = TableA.A2) as MinB1
    from TableA

or

    select A1
    from TableA
    where exists (select 1
                  from TableB
                  where TableB.B2 = TableA.A2)

Please note that these are just examples for better understanding, and that database optimizers can do a better job on simple subqueries like these. Our real-life SQL included several inserts-by-select and deletes-by-select with inverse exists / not exists operations on complex subqueries.

I know correlated subqueries are sometimes unavoidable, but often there are alternatives. In many cases they can be replaced by joins (which may or may not be faster - e.g. there are situations where exists / not exists operators are superior to joins, because the database will stop looping as soon as one row is found - but that of course depends on the underlying data). Uncorrelated subqueries, on the other hand (as opposed to correlated subqueries), should also outperform joins under normal circumstances.
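
To make the join alternative a bit more concrete, here is a minimal sketch that rewrites the first example query as a left join against an uncorrelated, grouped subquery and checks that both forms return the same rows. It uses Python's built-in sqlite3 module purely as a convenient harness; the sample data is made up for illustration, and SQLite is an assumption (our real application ran on a different database, and this says nothing about relative speed there).

```python
import sqlite3

# In-memory database with hypothetical sample data for TableA / TableB.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table TableA (A1 integer, A2 integer);
    create table TableB (B1 integer, B2 integer);
    insert into TableA values (1, 10), (2, 20), (3, 30);
    insert into TableB values (5, 10), (7, 10), (9, 20);
""")

# Correlated form: the inner query depends on the current TableA row.
correlated = """
    select A1,
           (select min(B1) from TableB where TableB.B2 = TableA.A2) as MinB1
    from TableA
    order by A1
"""

# Join form: the inner query is evaluated independently (grouped once),
# then joined. The left join keeps TableA rows without a match in TableB.
joined = """
    select TableA.A1, m.MinB1
    from TableA
    left join (select B2, min(B1) as MinB1
               from TableB
               group by B2) as m
           on m.B2 = TableA.A2
    order by TableA.A1
"""

correlated_rows = conn.execute(correlated).fetchall()
joined_rows = conn.execute(joined).fetchall()
print(correlated_rows == joined_rows)
```

Whether the join form is actually faster depends on the data and the optimizer, so it is worth measuring both on a realistic workload.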

SQL Server Pro Robert Vieira writes in "Professional SQL Server 2000 Programming": "Internally, a correlated subquery is going to create a nested loop situation. This can create quite a bit of overhead. Subqueries are substantially faster than cursors in most instances, but slower than other options that might be available."

When a join is not an option, it might be a good idea to take a step back and do some redesign in a larger context. We managed to achieve a many-fold performance boost on a typical workload by replacing the correlated subqueries with a different approach that produced the same results in the end.

Do not expect the database engine to optimize away all tradeoffs that might be caused by a certain query design. Even the most advanced optimizer can't take over complete responsibility from the developer. It's important to be aware of which kinds of tuning your database can do during execution, and which it cannot. Creating an index on the column of the correlated subquery that links it to the outer query is a good starting point.
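
As a rough illustration of that last point, the following sketch creates an index on TableB.B2 (the column that ties the subquery to the outer query) and asks the engine for its query plan afterwards. Again this uses Python's sqlite3 module and SQLite's "explain query plan" as stand-ins; the index name idx_tableb_b2 and the sample data are hypothetical, and other databases expose their plans through different tools.

```python
import sqlite3

# In-memory database with hypothetical sample data for TableA / TableB.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table TableA (A1 integer, A2 integer);
    create table TableB (B1 integer, B2 integer);
    insert into TableA values (1, 10), (2, 20), (3, 30);
    insert into TableB values (5, 10), (7, 10), (9, 20);
""")

query = """
    select A1,
           (select min(B1) from TableB where TableB.B2 = TableA.A2) as MinB1
    from TableA
    order by A1
"""

def plan_details(connection, sql):
    """Return the human-readable 'detail' column of SQLite's query plan."""
    return [row[3] for row in connection.execute("explain query plan " + sql)]

rows_before = conn.execute(query).fetchall()
plan_before = plan_details(conn, query)

# Index the column that ties the subquery to the outer query.
conn.execute("create index idx_tableb_b2 on TableB (B2)")

rows_after = conn.execute(query).fetchall()
plan_after = plan_details(conn, query)

# The results must not change; only the access path should.
uses_index = any("idx_tableb_b2" in detail for detail in plan_after)
print(plan_before)
print(plan_after)
```

The exact plan text differs between SQLite versions, so the sketch only checks whether the index name shows up in the plan rather than relying on a specific wording.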

Sunday, October 16, 2005

So, The Internet Started In 1995?

Maybe I am just too harsh on IT journalism, or on people writing about technical issues they just don't understand, but sometimes popular science articles are so far from the truth that it drives me crazy. This also makes you wonder about all other kinds of popular science publishing. I know about software, and most popular software articles are plain nonsense. That is where I notice. But what about topics regarding medicine, biology or physics? Is the same happening there as well?

E.g. Dan Brown's book Digital Fortress was cheered by the press, but littered with nonsense each time it came to talk about cryptographic issues.

Today, Austrian Broadcasting Corporation's tech portal ORF Futurezone published an article about the history of the Internet, stating that "Europe accepted TCP/IP and the Internet architecture in 1995" and "the ISO/OSI committees and telephone companies were opposing TCP/IP, as they were favoring the ISO/OSI networking model". You can't be serious! I mean, I understand the average Internet user today might think the Internet started in the mid-90's with the first graphical browsers Mosaic (1993) and Netscape (1994), but IT journalists should know better.

Error in reasoning #1: Equating the Internet with the World Wide Web. One might argue that the WWW took off in 1994 with the first graphical browsers available on widespread operating systems like Windows 3 or MacOS. But the WWW was and is just a subset of Internet protocol standards (mainly HTTP and HTML) and some server and browser implementations. When I took Internet classes at university in 1994, the WWW was mentioned as one of several applications next to Gopher, FTP, Mail, Usenet, Archie, WAIS and the like. In 1992, when I connected to the Internet for the first time, I did so to read Usenet. The predecessor network ARPANet had been introduced in 1969, and soon after the first mails were sent and files were transferred.

Error in reasoning #2: Putting the ISO/OSI network model and TCP/IP in conflict. A short answer to that one: ISO/OSI is a model for classifying network protocols, while TCP/IP is a concrete network protocol standard and implementation, where TCP is located at ISO/OSI layer 4 (transport layer) and IP at layer 3 (network layer).

Error in reasoning #3: Taking one interviewee's word for it that there was European opposition (e.g. from telephone companies) to the Internet and TCP/IP until 1995. Truth is: Norway was the first European country to connect its research institutions to the ARPANet, as early as 1973. Austria followed quite late, and joined NSFNet in 1989. The first commercial providers in Austria were founded in the early 90's, when the Internet was opened for commercial usage. If there was opposition from telephone companies, that was true for the US as well. Bob Taylor, ARPANet project lead, was tilting at windmills at AT&T for years, because packet-switching just did not fit into their circuit-switching-infested brains.

Saturday, October 08, 2005

New Office 12 User Interface

Revolutionary news from Microsoft - Office usability will never be the same again. Office 12 comes with a completely refurbished user interface. Folks, it was about time! E.g. all Word for Windows releases since at least version 2.0 (1992) essentially followed the same user experience paradigm.

For me, the most annoying feature ever was "personalized menus" (you know, only the most recently used menu items were visible, so you certainly wouldn't find what you were looking for). That one was introduced in, what was it, Office 2000? And don't get me started on Clippy.

But from what I have seen, Office 12 will be a real step forward. It's a bit risky: just imagine the install base - millions and millions of secretaries who have been ill-conditioned over the last decade in order to find the 1% of overall functionality that they are actually using. But only the bold one wins! Don't let Apple take away all the user interface glory, Microsofties!

Monday, October 03, 2005

Five Things Every Win32 Programmer Needs To Know

I was really looking forward to Raymond Chen's PDC talk about "Five Things Every Win32 Programmer Needs To Know" (partly because I was curious which five things he would pick, partly because I was wondering whether I would "pass the test" - although I do not really consider myself a Win32 programmer at heart). Unfortunately, Channel 9's handheld recording was unviewable because of the shaky picture and the poor audio quality. Luckily, Raymond's PDC slides are available on commnet.microsoftpdc.com.

Saturday, September 17, 2005

So You Think Visual Basic Is A Wimpy Programming Language?

Yes, hardcore programmers sometimes look down on their Visual Basic counterparts. Aren't those the guys who never have to deal with pointers, dynamic memory allocation and multithreading, and who are writing code in a language that was intended for novices (hence "Beginner's All-purpose Symbolic Instruction Code")? Those who use an interpreter whose roots go back to Microsoft's first product, Altair Basic? Hey, not so fast! I know Java and .NET developers who have no clue about pointers, dynamic memory allocation and multithreading either, and are easily outperformed by VB veterans.

Last week I introduced a Visual Basic programmer to MSXML, and prepared some code samples so she would have something to start with. And it's true, the VB6 syntax is partly bizarre. Not to mention the Visual Basic IDE, which pops up an error message box each time I leave a line of code that does not work yet (although I am sure there must be an option to switch off this annoyance). It also has the same usability charm as back when it was introduced on 16-bit Windows. The API is quite limiting as well, so the first thing I had to do was to import several Win32 functions.

Let's face it, legacy applications done in Visual Basic can turn into a CIO's nightmare. A huge number of little standalone VB applications have emerged during the last fifteen years, fragmenting corporate software infrastructure. VB applications are often hard to maintain because the language does little to enforce structured programming, VB6 itself is a dead horse, and the migration path to VB.NET is tedious.

Legions of VB programmers are supposed to switch over to VB.NET these days. VB.NET has little in common with the old platform apart from some syntax similarities. Not only do those programmers suddenly have to grasp OOP, they must also get to know the .NET framework as well as a completely different IDE. BTW, I really dislike the VB.NET syntax. Just compare the MSDN VB.NET and C# code samples. C# is elegant (thanks to MS distinguished engineer Anders Hejlsberg), while VB.NET is nothing but an ugly hybrid.

Another story comes to my mind: a friend of mine, an expert on Linux and embedded systems, once went to work for a new employer. He knew they were doing some stuff in Visual Basic. Then one day someone sent him a PowerPoint file. My friend was wondering what it was all about, as the presentation itself was empty. "No, it's no presentation, it's our codebase", the other guy answered. They had done their stuff in PowerPoint VBA. Unbelievable! Needless to say, my friend left that company shortly after.

So if you want to know my opinion: Visual Basic might be wimpy, but in some perverted way it's for the real tough as well.

Friday, September 09, 2005

Andy Hertzfeld On NerdTV

Bob Cringely just published the first in a series of interviews on NerdTV (hey, there is no MPEG-4 codec in Windows Media Player - luckily Quicktime supports MPEG-4). He talks with original Macintosh system software programmer Andy Hertzfeld about Apple, the Mac project, Steve Jobs, Open Source and how he just recently excavated the old source code of Bill Atkinson's MacPaint for Donald Knuth. Today Andy Hertzfeld runs Folklore.Org - "Anecdotes about the development of Apple's original Macintosh computer, and the people who created it".

At one point they refer to Cringely's 1996 TV-series "Triumph Of The Nerds", where Steve Jobs accused Microsoft of having "no taste" (his words actually were "The only problem with Microsoft is they just have no taste, they have absolutely no taste, and what that means is - I don't mean that in a small way I mean that in a big way.").

So after "Triumph Of The Nerds" aired, Steve Jobs came over to Andy Hertzfeld's place, and called Bill Gates to apologize. When Steve Jobs says sorry to Bill Gates, it goes like "Bill I'm calling to apologize. I saw the documentary and I said that you had no taste. Well I shouldn't have said that publicly. It's true, but I shouldn't have said it publicly". And Bill Gates responds saying "Steve, I may have no taste, but that doesn't mean my entire company has no taste". This little episode tells us a lot about those two characters, doesn't it?

Sunday, September 04, 2005

The Good, The Bad, The Ugly Elevator Panel User Interface

Some days ago, I blogged about the completely-screwed-up user interface of a parking garage ticketmachine here in the city of Linz, Austria. Same place, different usability nightmare: The garage elevator.

I should explain first: There are two parking decks, both under ground. They are labeled "level 1" and "level 2". The elevator stops at both levels, and at the ground floor from where you can exit to the outside.

Anyway, whoever is responsible for this parking garage's elevator panel did not follow the "Keep-It-Simple-Stupid" principle (remember, this panel and its "manual" are located outside the cabin):

Translation:

You want to enter the destination floor? [No, do I have to?]

Press "-".

Press "1" or "2".

No further operations required inside the cabin.

It's hard to notice, but the "-" button is actually in the bottom left corner.

There are only levels 0, 1 and 2 - what are the other buttons good for? Oh I see, future extensibility. Once they excavate a whole new level 3 under ground, at least they won't have to touch the elevator panel! And what is the "-" for? There is no "+" counterpart. It all ends at level 0. This just forces you to press two buttons instead of one. But then, it's mathematically correct, right?

So, you are standing at the ground floor, and want to go down. Unless you press "-" and then "1" or "2", the cabin will not even show up. Similarly, when you enter at level 2, you can enter either "0" or "-" followed by "1". It's just ridiculous!

Now I am sure the Petronas Twin Towers must have a complex shortest-path-selection-algorithm implemented for their elevator system. I understand this decision making can be simplified if the user signals the destination of his journey already when calling the cabin. As we all know, standard elevators provide one up- and one down-button for summoning the cabin, so the cabin only stops if it's passing by in the right direction (not to mention that many people still press the wrong button or both of them once they get impatient). This ought to be enough for small to medium size systems.

Where does that thing want to go anyway? On level 0, basement parking garage customers tend to go down. On the lower levels, they most likely want to get up to the surface. OK, some weirdos might enjoy shuttling between level 1 and 2. Anyway, with one and only one intermediate level, there is no real need for a complex path-optimization approach that would depend on preceding destination input. Knowing whether we want to go up or down, followed by destination input inside the cabin, should be the natural choice. Also, that's what people are used to.

I have observed elderly folks standing in front of that panel, giving up and taking the stairs on foot. I know this interface overwhelms users. But hey, at least there are "no further operations required inside the cabin".

And I have tried, it is not a telephone, either. And in case it's supposed to be a calculator, where is the square root button? (talking about calculators, just a short off-topic-insertion at this point: you might want to have a look at Kraig Brockschmidt's online book "Mystic Microsoft" - Kraig is the creator of the original Windows 1.0 calculator and author of "Inside OLE").

Friday, August 26, 2005

Multiple Inheritance And The "This"-Pointer

Excellent article on why using C++'s dynamic_cast is a good idea when casting multiple-inheritance instance pointers (this is true even if you derive from only one concrete class plus one or more pure abstract classes (= interfaces)). dynamic_cast is aware of the different vtable and member-data offsets (thanks to runtime type information), and will adjust the cast pointer accordingly.

Some C++ developers tend to keep their C-style casting habits, which will introduce fatal flaws into their code. My recommendation: If you haven't done so yet, get to know the four C++ casting operators, and apply them accordingly. Developers should understand the memory layout of their data types. And what a vtable is. And what a compiler can do, and what it cannot.

If still in doubt, use dynamic_cast - it's slower, but safe. Don't forget to check whether the cast was successful, though (a failed pointer cast yields a null pointer).

Thursday, August 18, 2005

How Bill Gates Outmaneuvered Gary Kildall

The History Of Computing Project provides plenty of information on companies and individuals who helped to shape today's computer industry. It includes historical anecdotes and timelines, e.g. a summary of Microsoft's history. What's missing in the 1980/1981 entries (dawn of the IBM PC and DOS) is the story of how Bill Gates outmaneuvered Digital Research and Gary Kildall, then the leading 8-bit OS vendor with their CP/M system. So here is a summary of what happened. I must have heard / read dozens of slightly different variations on this story over the years, so this outline of events should be pretty accurate:

Back at that time, Microsoft provided programming languages (Basic, Fortran, Cobol and the like) for 8-bit machines, while the most widespread operating system, CP/M, came from Digital Research. The two companies had an unspoken agreement on not raiding each other's market. But Microsoft had licensed CP/M for a very successful hardware product at that time - the so-called SoftCard for the Apple II. SoftCard contained a Zilog Z80 processor, and allowed Apple II owners to run CP/M and CP/M applications.

IBM - awakened by Apple's microcomputer success with the Apple II - approached Microsoft in search of an operating system and programming languages for their "Project Chess" = "Acorn" = "IBM PC" machine, which was to be a microcomputer based on open standards and off-the-shelf parts. Those off-the-shelf parts included Intel's 8088 CPU, a downsized 8086. One exception was the BIOS chip, which was reverse-engineered later by Compaq and others.

The legend goes that IBM's decision makers mistakenly thought CP/M originated from Microsoft, but that sounds doubtful. Anyway, Bill Gates told IBM they would have to talk to Digital Research, and even organized an appointment with Digital Research CEO Gary Kildall.

Kildall was a brilliant computer scientist, but probably missing the business instinct and restless ambition of Bill Gates. Some people say Gates didn't inform Kildall about who the prospective customer was, but only noted that "those guys are important", which might be plausible, as IBM was paranoid about anyone getting to know what they were doing. On the other hand, Gates himself once mentioned in an interview that Kildall knew it was IBM who was coming.

So the next day some IBM representatives, including IBM veteran Jack Sams, flew from Microsoft's office in Seattle to Pacific Grove, California, where Digital Research was located. When they arrived, Kildall was not there. While Gates later commented that "Gary went flying" (Kildall was a hobbyist pilot), truth is that Kildall already had another meeting scheduled and was visiting important customers, and flew his own plane to get there. Kildall's wife Dorothy McEwen and her lawyer fatally misjudged the situation in the meantime and declined to sign IBM's nondisclosure agreement, so IBM left empty-handed. It should be noted that Gates had no problem signing an NDA.

Again, different versions exist about that part of the story - one of them goes that Kildall actually appeared in the afternoon, but no agreement was reached either. Another problem at that time was that Digital Research had not even finished CP/M's 16-bit 8086 version.

When IBM returned to Microsoft still looking for an operating system, Gates did not pass up that opportunity. Microsoft co-founder Paul Allen knew of a 16-bit CP/M clone named "Quick and Dirty Operating System" (QDOS), written by Tim Paterson of nearby "Seattle Computer Products". Paterson had grown tired of waiting for CP/M-8086, so he had decided to build one on his own. In a matchless deal, Microsoft purchased QDOS for a mere USD 50,000, and transformed it into PC DOS 1.0. IBM had licensed DOS non-exclusively, which opened doors for Microsoft to sell MS DOS (back then in slightly varying versions) to every PC clonemaker that would come along.

At the same time, Digital Research finally finished their 16-bit CP/M, which was even offered by IBM as an alternative to DOS, but never took off mainly because of pricing reasons (USD 240 for CP/M in comparison to USD 40 for DOS). Thanks to the similarities between DOS and CP/M, CP/M applications were easily ported to DOS as well - sometimes it was enough to change a single byte value in the program's header section.

Back then IBM still believed that even on the microcomputer market, profits would stem from hardware sales, not from software sales (remember, they gave operating systems and standard applications away for free in order to sell mainframes - software was a by-product). Their next mistake was to open doors to clonemakers thanks to their open-standards approach and their licensing agreement with Microsoft. IBM also paid a high price later during their OS/2 cooperation with a then-strengthened Microsoft, when Microsoft suddenly dropped OS/2 in favor of Windows.

Myths entwine around the real events, and a lot of wrong or over-simplified versions are circulating. People tend to forget that Microsoft was quite successful long before the days of DOS, selling their Basic version, which ran on more or less every 8-bit microcomputer. They actually began in 1975 on the famous Altair. And no, Gates and Allen did not start their company in a garage (those were Steve Jobs and Steve Wozniak with Apple), they established Microsoft in Albuquerque, New Mexico - because that was where Altair producer MITS had its headquarters. Some years later they moved back to their hometown Seattle.

One of my college teachers once told us that "IBM came to Seattle to talk to an OS vendor, but those guys were out of town, so they went to Microsoft instead". Isn't the real story so much more amazing than that oversimplified one?

Update 2016: I found this interview with Tom Rolander, who actually "went flying with Gary" that fateful day (at 17min 7sec):

In 2015 I gave this presentation on the "History of the PC" at Techplauscherl in Linz:

Back then, Microsoft provided programming languages (Basic, Fortran, Cobol and the like) for 8-bit machines, while the most widespread operating system, CP/M, came from Digital Research. The two companies had an unspoken agreement not to raid each other's market. But Microsoft had licensed CP/M for a very successful hardware product of that time - the so-called SoftCard for the Apple II. The SoftCard contained a Zilog Z80 processor and allowed Apple II owners to run CP/M and CP/M applications.

IBM - awakened by Apple's microcomputer success with the Apple II - approached Microsoft in search of an operating system and programming languages for their "Project Chess" = "Acorn" = "IBM PC" machine, which was to be a microcomputer based on open standards and off-the-shelf parts. Those off-the-shelf parts included Intel's 8088 CPU, a downsized 8086. One exception was the BIOS chip, which was reverse-engineered later by Compaq and others.

The legend goes that IBM's decision makers mistakenly thought CP/M originated from Microsoft, but that sounds doubtful. Anyway, Bill Gates told IBM they would have to talk to Digital Research, and even organized an appointment with Digital Research CEO Gary Kildall.

Kildall was a brilliant computer scientist, but he probably lacked the business instinct and restless ambition of Bill Gates. Some people say Gates didn't inform Kildall about who the prospective customer was, but only noted that "those guys are important", which might be plausible, as IBM was paranoid about anyone getting to know what they were doing. On the other hand, Gates himself once mentioned in an interview that Kildall knew it was IBM who was coming.

Digital Research's former headquarters, Pacific Grove, California

Sunday, August 14, 2005

The Good, The Bad, The Ugly Ticketmachine User Interface

While visiting my wife in hospital last week, I got to know several inner-city parking garages. One of them caught my attention thanks to its quite unique ticket machine:

An unused display, several ugly stickers (some of them crossed out), redundant labels and arrows - so that even after passing the garage entrance several times I still could not find the ticket button immediately. Regarding the emergency button (="Notruf" in German), I wonder which kind of emergency one might be facing at this point anyway (perhaps a nervous attack brought on by visual overload).

Why on earth, if all of that could be so easy:

BTW, this garage has additional bad user experience to offer (hint: calculator-like elevator button panel, so what about pressing "-" and "1" when going to the basement?) - more on that in one of my next postings.

.NET 2.0: Nullable ValueTypes

Finally they are here: .NET 2.0 introduces Nullable ValueTypes (and a lot more than that - e.g. Generics). What a relief - it was kind of tedious having to implement our own nullable DateTime or GUID wrappers, just because those attributes happened to be nullable in the database as well, or because you wanted to pass null values for strongly typed method parameters.

Friday, August 05, 2005

Agile Programming

"Are you doing XP-style unit testing throughout your project? You know, we even write the test code prior to the functional code on each and every method," the recent hire (fresh from polytechnic school) insisted. "Leave me in peace", I said to myself, "I have real work to do". Two years later I asked him how he was doing. "You know, we gave up on the eXtreme Programming approach. It was just frustrating. Oh, and our project was cancelled."

Please don't get me wrong, XP brought some exciting new ideas, and I really admire Kent Beck for the groundwork he has laid in this area. XP also shares several ideas with the proposal I will discuss in a moment. I just don't understand people who immediately jump on the bandwagon of the latest software methodology hype each time something new comes along. Those folks don't act deliberately, they don't consider whether certain measures make sense in a specific project scenario, they don't challenge new approaches, they just blindly follow them. Why? I am not sure, but my theory is that forcing their favorite methodology on other people within their tiny software development microcosm gives their low self-esteem a boost.

So I am not going to force my favorite methodology on you. But maybe you are also tired of overly bureaucratic software engineering (you know, no line of code will be written until hundreds of pages of detailed specs have been approved by several committees), and eXtreme Programming is just too, well, extreme for your taste. The proposal that comes closest to what I think software development should be all about is Agile Programming (you might want to have a look at Martin Fowler's excellent introductory article). In essence, Agile Programming is people-oriented (it requires creative and talented people), acknowledges that there is just not a lot of predictability in the process of creating software, and puts emphasis on iterative development and communication with the customer.

Here, your design document IS in your source code. Software development is not like the building industry, which is why you cannot just draw a construction plan and hand it over to the workers (=programmers) for execution. Agile Programming embraces change (e.g. changing requirements - face it, this will always happen), and helps you to react accordingly, thus avoiding the rude awakening after months of denial that your project is off track - the kind of awakening that might happen as long as you can hide behind stacks of UML diagrams and project plans, but do not have to deliver a new software release each week.

There is no "One-Size-Fits-All", and I probably wouldn't use Agile Programming when building navigation software for NASA. But many of the agile principles just make sense within the projects I work on (and I try to apply those principles selectively).

I also don't completely agree with the Agile Manifesto's statement #1 "We have come to value individuals and interactions over processes and tools". If they had just left out the part about tools, it would have been fine. I know what they meant, though, but let me be picky this time. Tools are important. Thing is, smart individuals tend to use or create good tools, so it all comes together again in the end. The manifesto also continues "That is, while there is value in the items on the right [referring to processes and tools], we value the items on the left [referring to individuals and interactions] more". Unfortunately people often forget about the footnotes, and might interpret this more in the sense of "it doesn't matter that I use Edlin for programming, as long as I write clever code".

Changing topic, did you know that Microsoft Project was tailored for building industry projects, NOT for software projects? Think about it for a moment.
