"Humans and higher primates share approximately 97% of their DNA in common. Recent research in primate programming suggests computing is a task that most higher primates can easily perform. Visual Basic 6.0 was the preferred IDE for the majority of experiment primate subjects."

Sunday, January 30, 2005

Primate Programming

We should really consider getting our next software contractors from Primate Programming, Inc. Looks like a talented bunch of people.

"Humans and higher primates share approximately 97% of their DNA in common. Recent research in primate programming suggests computing is a task that most higher primates can easily perform. Visual Basic 6.0 was the preferred IDE for the majority of experiment primate subjects."

Thursday, January 27, 2005

Listen To Your Star Programmers

As Robin Sharp and others have pointed out before, several empirical studies show that the best programmers are between 10 and 20 times more productive than the worst programmers. So let's suppose you are lucky enough to have some of those star programmers. Often they also tend to be the kind of people who think outside the box. They might observe what's going wrong in your organization. If they tend to be introverted, they probably won't talk about those observations, while others will. Now what are you - the manager - going to do about it? Two possibilities:

(1) Take your star programmers seriously. Ask for their opinion - all the time. You might not agree with everything they say, but at least listen and give them some feedback. Gain new insights from their perspective, or - especially if you happened to be a programmer once yourself - try to walk in their shoes again. And most of all: do your best to make them happy. You are in the software business. Those folks are by far your most valuable asset.

(2) Ignore your best people's objections. Fob them off with comments like "You don't see that from a management perspective" or simply "You are wrong". Make them feel like replaceable parts, e.g. by letting them know they could easily be substituted by any mediocre developer walking down the street. And once they decide to leave, blame them and forget about their dedication and achievements, from which your company has benefited.

So which alternative is chosen in most of today's corporations? You may have guessed it - number (2). More often than not it's the top-notch people who care and try to improve their own and their coworkers' environment, and who try to point out where things simply go wrong-wrong-wrong. Yes, once they fail on this crusade, they might actually leave disenchanted (frequently after a long, personal struggle) - after all, those are the folks who won't have a problem finding a new opportunity somewhere else.

Once it's too late, management might finally ask what went wrong (or probably not). If you see one of your stars leaving the ship, it may be coincidence or just bad luck, but two or more mean that the sh.. hits the fan. How are you planning to be competitive with mostly not-so-gifted people and one or two morons? (If your organization has reached a certain size, expect to have at least some morons sitting around, those who slipped through your recruitment process. A high moron ratio is yet another way to frustrate your star programmers). If it's not the bottom 20% who quit, but the top 20%, then "something is rotten in the state of Denmark."

A somewhat deeper analysis regarding this topic can be found in one of my all-time favorite books, Tom DeMarco's / Timothy Lister's "Peopleware":

The final outcome of any effort is more a function of WHO does the work, not HOW the work is done. Yet modern management science pays almost no attention to hiring and keeping the right people.

We will attempt to undo the damage of this view, and replace it with an approach that encourages you to court success with this formula:

- Get the right people

- Make them happy so they don't want to leave

- Turn them loose

Why people leave:

- A just-passing-through mentality: Co-workers engender no feelings of long-term involvement in the job.

- A feeling of disposability: Management can only think of its workers as interchangeable parts.

- A sense of loyalty would be ludicrous: Who could be loyal to an organization that views its people as parts?

Wednesday, January 26, 2005

How To RTFM

Now that's what I call one of the more offensive MSDN fakes - I mean - pages (Parental Advisory: Explicit Language).

Prerequisites:

- The Ability to Read

- Basic Brain Function

Symptoms:

After asking a truly pathetic question, you are instructed to RTFM.

Thursday, January 20, 2005

Sorting Algorithms Visualized

Peter Weigel and Andreas Boltzmann did a really nice job of visualizing the internal workings of different sorting algorithms. Their simulation also shows the huge performance penalty of O(N^2) approaches (e.g. Bubblesort) in comparison to O(N * log(N)) approaches (e.g. Quicksort in the average case).

The original implementation consisted of a Java top-level Window with German localization, so I did a quick hack and threw it all into an applet container, as well as translating it to English.

Update 2015: If you cannot see anything, this is most likely caused by your browser not supporting unsigned applets any more. Sorry!
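For readers who cannot run the applet, the gap between the two complexity classes can also be made visible by simply counting comparisons. The following is only a minimal sketch, not the code behind the visualization: the function names are my own, and the Quicksort variant is a simple list-based one chosen for readability rather than speed.

```python
import random


def bubble_sort(items):
    """Sort a copy of items; return (sorted_list, comparison_count)."""
    data = list(items)
    comparisons = 0
    n = len(data)
    for i in range(n - 1):
        swapped = False
        # After pass i, the last i elements are already in place.
        for j in range(n - 1 - i):
            comparisons += 1
            if data[j] > data[j + 1]:
                data[j], data[j + 1] = data[j + 1], data[j]
                swapped = True
        if not swapped:
            break  # no swaps in this pass: the list is sorted
    return data, comparisons


def quicksort(items):
    """Return (sorted_list, comparison_count).

    Counts one pivot comparison per element per partitioning step.
    """
    data = list(items)
    if len(data) <= 1:
        return data, 0
    pivot = data[len(data) // 2]
    smaller = [x for x in data if x < pivot]
    equal = [x for x in data if x == pivot]
    larger = [x for x in data if x > pivot]
    left, left_count = quicksort(smaller)
    right, right_count = quicksort(larger)
    return left + equal + right, len(data) + left_count + right_count


if __name__ == "__main__":
    random.seed(42)
    values = random.sample(range(1_000_000), 1000)
    _, bubble_count = bubble_sort(values)
    _, quick_count = quicksort(values)
    print("Bubblesort comparisons:", bubble_count)
    print("Quicksort comparisons: ", quick_count)
```

On random input the Bubblesort count grows roughly with the square of the list length, while the Quicksort count grows only slightly faster than the length itself.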

Wednesday, January 19, 2005

How Nerdy Are You?

Now, I am not quite sure if 98% nerdhood is something to be proud of (probably not). I guess that about 80% would be far healthier. But I really SHOULD have a biohazard sign on my room, if only my wife would allow me to...

Anyway, I know one or two guys who would easily rank at 100% on that test - or have you heard of anyone else on this planet, who runs Windows on Virtual-PC-for-Mac on Mac-on-Linux emulator "MOL" on top of Debian on an iBook G4 - just for the fun of it?

Tuesday, January 18, 2005

Who On Earth Is Patrick Naughton?

Although James Gosling has been inescapably tagged as "The Inventor Of Java" (which is undoubtedly true - he was on the project from day one, designing the language and writing the first compiler), the roots of Java actually go back to a guy named Patrick Naughton. Naughton, a young, talented software engineer, had been working in Sun Microsystems' "NeWS" group since 1988. Gosling - the group's lead - became Naughton's mentor.

Still, in 1990 Patrick Naughton was about to leave Sun. His "X11/NeWS"-project was going nowhere. Steve Jobs' NeXTSTEP was the hot new thing at that time, and Jobs offered Naughton a job at NeXT.

When Naughton told Scott McNealy about his resignation at the "Dutch Goose" in Menlo Park (some Sun folks had met there for beers and burgers, after one of Sun's famous employee hockey games), McNealy tried to convince him to stay with Sun, or at least to postpone his departure. When he did not succeed in that, McNealy asked Naughton for a memo describing what he would change at Sun.

Naughton suggested reducing project staffing by an order of magnitude, where the remaining small core of people could do more to effect change quickly than they would ever get with any compromise solutions. He wanted to do fewer things, but do those fewer things better.

Says Naughton: "I lined up a sequence of suppositions for why projects fail inside of Sun that made a firm argument for creating a group that had complete autonomy from mainline Sun. I suggested that we would need to be completely secret, a black project. We would move off campus to some non-descript locale nearby, like the NFS group had done in Menlo Park a few years earlier. We would have to have a tiny group of people, not larger than a round table at Little Garden, our favorite Schezchuan/Hunan Chinese place in Palo Alto. We would get to hand pick a few senior contributors from inside of Sun, then go outside for art and design help. We would be exempt from the Sun Product Strategy Committee guidelines about using SPARC, Solaris and OPEN LOOK. In fact, we would explicitly build for some other platform, not at all like a workstation."

McNealy accepted, and added a significant salary raise, so Naughton stayed. This was the dawn of project "Green" (later: "Oak"), which took some more interesting twists, which Naughton describes in detail in "The Long Strange Trip to Java". The rest is history.

Naughton bailed out in 1994 after some dispute with his vice president, just a few months before Java was introduced to the public as we know it today (in 1993, Sun had tried to sell "Oak" in the consumer electronics market as a system platform for set-top boxes).

Less well known is what happened to Naughton afterwards. He became CTO at a company named Starwave, and later VP at Infoseek/Go Network/Disney. In 1997 Naughton wrote an autobiographical article for Forbes: "Mr. Famous Comes Home".

More information from Sun: "Java Technologies - The Early Days". Naughton appears on the team's group photo. This page also includes a screenshot of "Webrunner" (later: HotJava browser), which had been developed by - Patrick Naughton.

Monday, January 17, 2005

Bill Gates Strikes A Pose (1983)

Monkey Methods dug this one up: Bill Gates in love with his 1983 IBM PC.

What about the dark rings under his eyes - too much late-night hacking on Advanced Disk BASIC for DOS 2.0?

I think I remember reading somewhere that 1983 was actually the last time Bill Gates did some coding work on a Microsoft product, namely Microsoft Fortran.

Sunday, January 16, 2005

Floating-Point Arithmetic

"Floating-point arithmetic is considered an esoteric subject by many people. This is rather surprising because floating-point is ubiquitous in computer systems."

What Every Computer Scientist Should Know About Floating-Point Arithmetic

Thursday, January 13, 2005

The 640k Legend

It does not really come as a surprise: Bill Gates' famous "640k should be enough for everyone"-statement is a canard. James Fallows (National correspondent for "The New York Review of Books") recently received the following mail from the Microsoft Chairman and Chief Software Architect...

This is one of those "quotes" that won't seem to go away.

I've explained that it's wrong when it's come up every few years, including in a newspaper column and in interviews.

There is a lot of irony to this one. Lou Eggebrecht (who really designed the IBM PC original hardware) and I wanted to convince IBM to have a 32-bit address space, but the 68000 [a Motorola-designed processing chip, eventually used in the Apple Macintosh] just wasn't ready. Lew had an early prototype but it would have delayed things at least a year.

The 8086/8088 [the Intel-designed chip used in early personal computers] architecture has a 20-bit address bus [the mechanism used by the microprocessor to access memory; each additional "bit" in the address bus doubles the amount of memory that can be used], and the instruction set [the basic set of commands that the microprocessor understands] only generates 20-bit addresses.

I and many others have said the industry "uses" an extra address bit every two years, as hardware and software become more powerful, so going from 16-bit to 20-bit was clearly not going to last us very long. The extra silicon to do 32-bit addressing is trivial, but it wasn't there. The VAX was around and all the 68000 people did was look at the VAX! 2 to the 20th is 1 megabyte (1024K), so you might ask why the difference between 640K and 1024K—where did the last 384K go?

The answer is that in that 1M of address space we had to accommodate RAM [random access memory], ROM [read-only memory], and I/O addresses [Input/Output addresses used for "peripherals" like keyboards, disk drives, and hard drives], and IBM laid it out so those other things started at 640K and used all the memory space up to 1M. If they had been a bit more careful we could have had 800K instead of 640K available.

In fact, we had 800K on the Sirius machine, which I got to have a lot of input on (designed by Chuck Peddle, who did the Commodore Pet and the 6502, too). The key problem though is not getting to use only 640K of the 1M of address space that was available. It's the 1M limit, which comes from having only 20 bits of address space, which is all that chip can handle!

So, this limit has nothing to do with any Microsoft software.

Although people talk about previous computing as 8-bit, it was 16-bit addressing in the 8080/Z80/6800/6502 [all early processing chips]. So we had only 64K of addressability.

Amazingly people like Bob Harp (Vector Graphics—remember them?) went around the industry saying we should stick with that and just use bank switching techniques. Bank switching comes up whenever an address space is at the end of its life. It's a hack where you have more physical memory than logical memory. Fortunately we got enough applications moved to the 8086/8 machines to get the industry off of 16-bit addressing, but it was clear from the start the extra 4 bits wouldn't be sufficient for long.

Now you MIGHT think that the next time around the chip guys would get it right.

But NO, instead of going from 20 bits to 32 bits, we got the 286 chip next. Intel had its A team working on the 432 (remember that? Fortune had a silly article about how it was so far ahead of everyone, but it was a dead end even though its address space was fine). The 286's address space wasn't fine. It only had 24 bits. It used segments instead of pages and the segments were limited to 24 bits.

When Intel produced the 32-bit 386 chip, IBM delayed doing a 386 machine because they had a special version of the 286 that only they could get, and they ordered way too many of them.

It's hard to remember, but companies were chicken to do a 386 machine before IBM. I went down to Compaq five times and they decided to be brave and do it. They came out with a 386 machine! So finally the PC industry had a 32-bit address space.

We have just recently passed through the 32-bit limit and are going to 64-bit. This is another complex story. Itanium is 64-bit. Meanwhile, AMD on its own has extended the x86 to 64-bit.

Even 64-bit architecture won't last forever, but it will last for quite a while since only servers and scientific stuff have run out of 32-bit space right now. In three or four years the industry will have moved over to 64-bit architecture, and it looks like it will suffice for more than a decade.

Apollo actually did 128-bit architecture really early, as did some IBM architectures. But there are tradeoffs that made those not ever become mainstream.

A long answer to just say "no." I don't want anyone thinking that the address limits of the PC had something to do with software or me or a lack of understanding of the history of address spaces.

My first address space was the PDP-8. That was a 12-bit address space!

Even the 8008, at 14 bits, was a step up from that.
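The arithmetic behind the mail is easy to check. The snippet below is just a small sketch of my own (the function name is hypothetical): it computes how many locations each address width mentioned above can reach, and where the "missing" 384K of the original PC went.

```python
def addressable_units(address_bits):
    """Number of distinct addresses that fit into the given number of bits."""
    return 2 ** address_bits


K = 1024

if __name__ == "__main__":
    # Address widths mentioned in the mail: PDP-8, 8008, 8080/Z80/6800/6502,
    # 8086/8088, 286 and 386.
    for bits in (12, 14, 16, 20, 24, 32):
        print(f"{bits:2d} bits -> {addressable_units(bits) // K:>8d} K")

    total_k = addressable_units(20) // K   # 1024 K on the 8086/8088
    usable_k = 640                         # RAM below the area IBM reserved
    reserved_k = total_k - usable_k        # ROM and I/O addresses
    print("Reserved above 640K:", reserved_k, "K")

    # Rule of thumb from the mail: one extra address bit every two years,
    # so the step from 20 to 32 bits buys roughly this many years.
    print("Years gained from 20 to 32 bits:", (32 - 20) * 2)
```

Note that the PDP-8 addressed 12-bit words rather than bytes, so the first row counts words, not bytes.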

Tuesday, January 11, 2005

Electronic Arts - A Company Not To Work For?

Two recent blog entries (by Joe Straitiff, a former employee, and by E.A.-spouse: E.A.-The Human Story) suggest that Electronic Arts is not really a great company to work for. Even worse, E.A. seems to push its employees (whether they have a family or not) to the brink of mental and physical exhaustion, demanding a constant 80-90 working hours per week without any compensation whatsoever.

E.A.-spouse writes: "When you make your profit calculations and your cost analyses, you know that a great measure of that cost is being paid in raw human dignity, right?"

Scary.

Monday, January 10, 2005

To Comment Or Not To Comment

I have noticed a steady increase in page views lately, so I am currently considering turning on blog commenting (hey, I didn't really know someone was actually reading all of this ;-) ). I would really like to see some feedback on certain articles (e.g. someone might want to disprove my "IP packet fragmentation" theory). On the other hand, blog comment spamming is quite a problem, and tends to bury legitimate responses. Blogger.com allows restricting comments to registered blogger.com users, but that would limit the group of commenters considerably.

I will probably give it a test run in some days. Please stay tuned.

Saturday, January 08, 2005

Java: Duke Vader Vs. The Casting Knights

Matt Quail obviously does not appreciate generics in Java 5 ("Tiger"). Says Casting Knight Yoda: "Fear leads to Generics. Generics lead to Autoboxing. Autoboxing leads to NullPointerExceptions".

A hilarious flash animation: Episode III - The Revenge of the <T>.

Starring James Gosling & The Casting Knights, Duke Vader, and many more.

Friday, January 07, 2005

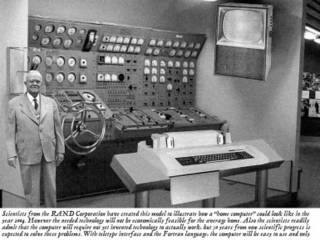

Home Computer As Of 2004

Another infamous what-will-technology-look-like prediction.

From the text: "Scientists of RAND Corporation have created this model to illustrate how a 'home computer' could look like in the year 2004. [...] The scientists readily admit that the computer will require not yet invented technology. [...] With teletype interface and the Fortran language, the computer will be easy to use [...]"

I personally like the bulkhead wheel. What is that, did RAND invent the trackpoint before IBM did?

Update: Did some further research (the bulkhead wheel had made me suspicious), and yes, it's a hoax. In reality, this is the maneuvering console of a 1950's US submarine. Here is the original photograph, taken at the Smithsonian Institution exhibit "Fast Attacks and Boomers: Submarines in the Cold War" in the year 2000:

More at Snopes.Com.

IT Conversations

IT Conversations provides a set of interviews with computer industry legends, e.g. Steve Wozniak, Joel Spolsky or Bruce Schneier.

Tuesday, January 04, 2005

Rational ClearCase

Murray Cumming writes: Lots of mistakes have been made during this project, but nothing else has hurt us so much as ClearCase. Every other problem could have been overcome if it hadn't been for ClearCase at the bottom of our dependency tree. This could not be more obvious.

I understand Murray's pain with ClearCase. Still, I'd say ClearCase itself is not the root of all evil, but its misapplication is - and clearly ClearCase does not prevent its own misapplication (even worse, it encourages it). I have wandered the swamp of evil at one place where people completely abused ClearCase features - version trees (or should I say forests?) of byzantine complexity, dozens of branches (the kind that can never be merged back), differing config.spec's on each client, that kind of stuff. Somewhere else, I saw ClearCase work just beautifully (aligned with clear developer guidelines, using snapshot views only).

Saturday, January 01, 2005

Mediocre Programming And The Lack Of A Well-Defined Test Plan

The German federal labor office is facing a software "MCA" ("maximum credible accident") these days. Their new unemployment benefit software - a project which has received enormous attention lately, mainly due to its heavily criticized schedule (IBM had backed out, doubting its feasibility) and questionable implementation - seems to fail miserably in its bank account formatting function: zeros are being appended instead of prepended (e.g. account number "123456" is being formatted as "12345600" instead of "00123456"), which results in erroneous transfers.

Hundreds of thousands of unemployed people are not receiving their benefits. The responsible federal agency had already declared victory as things had finally seemed to work - but disaster struck only two days later.

Hard to imagine how this is possible - this functionality must be at the center of every test plan. The coding error itself is horrible, no doubt (never let mediocre programmers touch your crown jewels), but human failure is as certain as anything in software development. The testing process has to ensure that such errors will never slip into production code.
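To illustrate how small the difference between the correct and the faulty behavior is, here is a sketch in Python. It is not the labor office's code, which I have never seen; both function names are made up, and the fixed width of 8 digits is merely taken from the example above.

```python
def format_account_number(account, width=8):
    """Left-pad an account number with zeros (hypothetical helper)."""
    digits = str(account)
    if not digits.isdigit():
        raise ValueError(f"not a numeric account number: {digits!r}")
    if len(digits) > width:
        raise ValueError(f"account number longer than {width} digits: {digits!r}")
    return digits.rjust(width, "0")  # zeros go in front


def format_account_number_buggy(account, width=8):
    """Reproduces the reported symptom: zeros end up behind the number."""
    return str(account).ljust(width, "0")


if __name__ == "__main__":
    print(format_account_number("123456"))        # 00123456
    print(format_account_number_buggy("123456"))  # 12345600
```

A single test case with an account number shorter than the field width is enough to tell the two apart, which is exactly why such a case belongs in the test plan.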

Somehow this reminds me of an incident that happened to me years ago. We had let a college intern write an amount formatting function (this was in the days of Java 1.0, long before java.text.NumberFormat). The code was clumsy and hard to read, but it seemed to work (and we were under time pressure, as always) - so we gave the green light to merge it into our production code branch. Some days before installation, I had another look at it (there was just this unpleasant feeling about it that wouldn't leave me alone), and from a more detailed static analysis I noticed that it would fail on a certain value range: the leading sign was reversed in those cases (from plus to minus, and vice versa). Our test data happened not to contain any values within that range. Now that would have been a "maximum credible accident" as well. Luckily we were just in time to fix it. Our test data was adjusted accordingly the very same day.
