Over the last weeks, a small fraction of our customers reported some application instability. The client, a .NET 1.1 WinForms program (due to .NET framework backward incompatibilities resp. several third party tool problems we have not been able to migrate to .NET 2.0 yet), would simply terminate occasionally without any error message, dump or whatsoever. Several modules were affected, and there seemed to be no systematic scheme behind it.

After talking with some customers we figured out what those crashes had in common: they all occurred when a worker thread had been spawned to do some DB stuff, while the UI thread would display a modal progress dialog. Moving to a single thread (namely the UI thread) was no alternative, as the worker thread's task included some long running DB stuff, which would block the client, hence make the UI non-responsive, even when applying

Application.DoEvents in between).

I suspected a concurrency issue, and had a closer look at the code - but there was nothing wrong about it, data access was cleanly separated between the two threads, which would only communicate with each other on a message basis.

So the next thing we tried was to reproduce the error on one of our test machines. But bad luck again. We had recorded a macro which represented the scenario our customers had described, but our application wouldn't crash, not in hours, not in days.

Finally we digged out an old developer PC which had actually been scrapped already, and there it happened, just as the customer had reported it - repeatedly, and within minutes. The .NET runtime simply terminated, do dump, no system log entry, nothing. Attaching the debugger didn't help either. Next I invoked several profilers (

Compuware BoundsChecker,

Rational Purify and

JetBrains DotTrace). Profiling also did not provide new insights. BoundsChecker and Purify originally are unmanaged code profilers - they do support .NET profiling, but still it doesn't seem to be a first class citizen, and DotTrace requires .NET 2.0 for memory profiling.

So time for

WinDbg, Microsoft's system level debugger. I didn't really have a lot of necessity to apply WinDbg before, but this seemed to be one of those cases. Luckily I read Tess'

"If broken it is, fix it you should"-blog on Windows debugging at regular intervals, so this turned out to be helpful. Impatiently anticipating some kind of progress, I attached WinDbg to our application, and sure enough some time later it broke at a

second chance exception. The call stack was full of entries like

mscorwks!GetCompileInfoYes the .NET runtime crashed, and it was clear I needed some runtime symbol files ("GetCompileInfo" is just a placeholder).

Fortunately there is the so called Microsoft symbol server, from which one can either download all kinds of system symbol files, or simply let WinDbg connect to it and do the job (File / Symbol File Path / "srv*c:\symbols*http://msdl.microsoft.com/download/symbols"). Furthermore I wanted better support for .NET debugging, so I loaded the SOS (Son of Strike) extension into WinDbg:

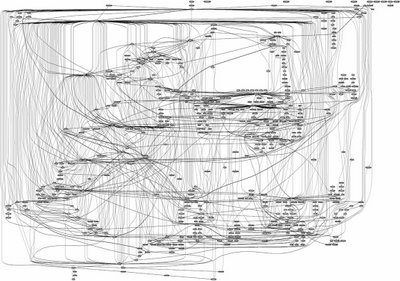

0:000> .load clr10\sosOn the next crash, the callstack made more sense:

0:000> kb

00 0012d3d0 79216288 0162652c 00166c8c 00000001 mscorwks!gc_heap::mark_object_simple+0x77 (FPO: [Non-Fpo])

01 0012d44c 79213753 79211d81 00000000 00000000 mscorwks!gc_heap::mark_through_cards_for_segments+0x28f (FPO: [Non-Fpo])

02 0012d478 7921248e 00000001 00000000 00000000 mscorwks!gc_heap::mark_phase+0xb6 (FPO: [Non-Fpo])

03 0012d4d4 7921301a 00000000 00000000 00000000 mscorwks!gc_heap::gc1+0x96 (FPO: [Non-Fpo])

04 0012d514 79213c37 00000000 00000000 00000000 mscorwks!gc_heap::garbage_collect+0x1bf (FPO: [Non-Fpo])

05 0012d534 79214e83 00000000 00000000 00000044 mscorwks!GCHeap::GarbageCollectGeneration+0x11b (FPO: [Non-Fpo])

06 0012d564 792d176b 793df808 00000044 00000000 mscorwks!gc_heap::allocate_more_space+0x13a (FPO: [Non-Fpo])

07 0012d788 791b3af0 00000044 00000002 0012d7b8 mscorwks!GCHeap::Alloc+0x77 (FPO: [Non-Fpo])

08 0012d798 791d6280 00000044 00000000 00040000 mscorwks!Alloc+0x3a (FPO: [Non-Fpo])It was the garbage collector, and it looked like some managed heap corruption. "verifyheap" would tell me for sure:

0:000> !verifyheap

VerifyHeap will only produce output if there are errors in the heap

object cbad00: bad member 162652c at 00cbad30

curr_object : 0xcbad00 size = 0

Last good object: 0xcbac94OK, maybe the last good object would supply some further clues:

0:000> !do 0xcbac94

Name: System.Data.SqlClient.SqlCommandI tried this several times, the last good object always was either System.Data.SqlClient.SqlCommand or System.Data.SqlClient.SqlDataAdapter. I had a look at our code and checked which other objects were instantiated in this context. Besides SqlCommand and SqlDataAdapter there was System.Data.SqlClient.SqlConnection. So I googled for "managed heap corruption" for each of those three classes, and there it was:

"Managed Heap Corruption When You Use SqlConnection". From the text:

"[...] This causes the Microsoft .NET application to quit unexpectedly during the garbage collection phase".

This is an MDAC (

Microsoft Data Access Components) 2.7 problem (MDAC 2.7 SP1 solves it), which occurrs when database connections are being rapidly opened and closed (logically, not physically) on multiple threads. As .NET 1.1 itself only requires MDAC 2.6 or above, older clients that have not been updated are most likely affected.

It had been a hard day's work.